This is Part 2 of my past weekend’s activities with SharePoint and SAP Integration methods.

In this post, I look at how to use the BizTalk Adapter for SAP with SharePoint.

Topics

- Abstract

- Goal

- Business Scenario

- Environment

- Document Flow

- Integration Steps

- .NET Support

- Summary

Abstract

In the past few years, businesses have increasingly moved toward implementing Enterprise Resource Planning (ERP) systems for key areas such as marketing, sales, and manufacturing operations. Today, most large organizations that operate in the major world markets rely heavily on these systems.

An organization’s operational systems span its worldwide network of marketing teams as well as its manufacturing and distribution operations. To provide customers with accurate information, each of these systems needs to be integrated as part of the larger enterprise.

This ultimately results in a more efficient enterprise overall, with more reliable information and better customer service. This post addresses the integration of BizTalk Server with an Enterprise Resource Planning system, why the two need to be integrated, and their role in the current e-business scenario.

Goal

Key business parties, such as customers and partners, need to communicate on several fronts to sustain a successful business relationship. Achieving this communication requires integrating various systems, which in turn drives an organization to evaluate and develop a B2B integration capability and an e-business strategy. This improves the quality of the business information at the organization’s disposal, which helps it improve delivery times, reduce costs, and offer customers a higher level of overall service.

To provide B2B capabilities, the organization must give partners access to business application data so that they can execute global business transactions. Facing internal integration and business-to-business (B2B) challenges on a global scale, the organization needs a suitable integration solution.

To integrate its worldwide marketing, manufacturing, and distribution facilities, which are built around a core ERP system, with a variety of other information systems, the organization needs a strategic deployment of integration technology products and integration service capabilities.

Business Scenario

Take the example of ABC Manufacturing Company, whose success rests on the strength of its Europe-wide trading relationships. The company recognizes the need to strengthen these relationships by processing orders faster and more efficiently than ever before.

The company needed a new platform that could integrate orders from several countries, accept payments in multiple currencies, and translate measurements according to each country’s standards. The bottom line for ABC’s e-strategy was to accelerate order processing. To achieve this, the basic requirement was to eliminate repeated collection of the same data and the use of invalid data.

By using less paper, ABC would cut processing costs and speed up the flow of information. With this long-term goal in mind, ABC Manufacturing Company can now consider integrating its four key countries into a new business-to-business (B2B) platform.

Here is another example: XYZ Marketing Company. Users visit this company’s website to explore a variety of products from its thousands of customers all over the world. The company has always understood that it could offer its customers greater benefits if it could integrate more efficiently with their back-end systems. With such integration, customers could enjoy the advantages of highly efficient e-commerce sites, where a visitor on the Web could place an order that flows smoothly from the website to the customer’s order entry system.

Some of those back-end order entry systems are built on the latest, most sophisticated enterprise resource planning (ERP) systems on the market, while others are built on legacy systems that have never been upgraded. Different customers require information formatted in different ways, but XYZ has no elegant way to transform the information coming out of its website to meet each customer’s needs. With the traditional approach:

For each new e-commerce customer on the site, XYZ’s staff must spend a significant amount of time creating a transformation application to facilitate the exchange of information. With a better approach, XYZ would use a robust messaging solution that provides the flexibility and agility to meet a range of customer needs quickly and effectively. Again, XYZ can consider integrating its customers’ back-end systems with the help of a business-to-business (B2B) platform.

Environment

Many large-scale organizations maintain a centralized SAP environment as their core enterprise resource planning (ERP) system. The SAP system is used to manage and process all global business processes and practices. B2B integration relies mainly on asynchronous messaging, Electronic Data Interchange (EDI), and XML document transformation to exchange information between the ERP system and other applications, including legacy systems.

For business document routing, transformation, and tracking, the existing SAP XML/EDI technology road map needs an XML service engine. This allows a complex set of mappings to and from SAP to be developed in line with internal and external XML/EDI technology and business strategy. Microsoft BizTalk Server is well suited to these data interchange and mapping requirements, and it offers comprehensive development and management support for business-to-business integration. Microsoft BizTalk Server and BizTalk XML Framework version 2.0, with Simple Object Access Protocol (SOAP) version 1.1, provide the kind of messaging solution needed to facilitate this integration in a cost-effective manner.

Document Flow

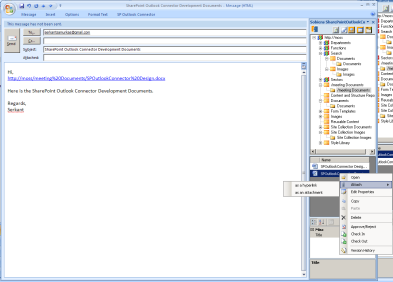

Friends, now let’s look at the actual flow of a document from the source system to the customer’s target system using BizTalk Server. When a document is created, it is sent to a TCP/IP-based Application Link Enabling (ALE) port, which is a BizTalk receive function used for XML conversion. The receive function then passes the XML to a processing script (VBScript) running as a BizTalk Application Integration Component (AIC). The following figure shows how BizTalk Server acts as a hub between applications that reside in two different organizations:

A BizTalk channel then serializes the data into the customer’s or vendor’s XML format, using the Extensible Stylesheet Language Transformations (XSLT) generated by the BizTalk Mapper. The XML document is sent using synchronous Hypertext Transfer Protocol Secure (HTTPS) or another transport protocol requested by the customer, such as the Simple Mail Transfer Protocol (SMTP).

The following figure shows steps for XML document transformation:

The serialized XML result is passed back to the processing script running as a BizTalk AIC. An XML “receipt” document is then created and submitted to another BizTalk channel, which serializes the XML status document into an SAP IDOC status message. Finally, a compiled C++/VB program makes a Remote Function Call (RFC) to the SAP instance/client to update the SAP IDOC status record, which closes the loop of document reconciliation. If the status is not successful, an e-mail message is sent to the support team that owns the customer/vendor XML/EDI transactions so that the conflict can be resolved. All of this happens in near real time, in a completely event-driven infrastructure between SAP and BizTalk.
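
The reconciliation loop just described can be sketched in Python. This is a simplified, hypothetical model rather than BizTalk code: `send`, `update_sap_status`, and `notify_support` are placeholder callables for the transport (HTTPS or SMTP), the RFC call that updates the IDOC status record, and the support e-mail, and the `SUCCESS`/`FAILED` receipt values are invented for illustration, not real SAP IDOC status codes.

```python
import xml.etree.ElementTree as ET

# Hypothetical receipt values; real IDOC status codes are not modelled here.
STATUS_OK = "SUCCESS"
STATUS_FAILED = "FAILED"


def build_receipt(idoc_number, delivered):
    """Create the XML 'receipt' document for one delivery attempt."""
    receipt = ET.Element("receipt", {"idoc": idoc_number})
    receipt.set("status", STATUS_OK if delivered else STATUS_FAILED)
    return ET.tostring(receipt, encoding="unicode")


def reconcile(idoc_number, xml_doc, send, update_sap_status, notify_support):
    """Send a document, record the outcome in SAP, and alert support on failure.

    send(xml_doc) -> bool           stands in for HTTPS/SMTP delivery
    update_sap_status(num, status)  stands in for the RFC status update
    notify_support(num, receipt)    stands in for the support e-mail
    """
    delivered = send(xml_doc)
    receipt = build_receipt(idoc_number, delivered)
    # The receipt is parsed back, as the status channel would do.
    status = ET.fromstring(receipt).get("status")
    update_sap_status(idoc_number, status)
    if status != STATUS_OK:
        notify_support(idoc_number, receipt)
    return status
```

Passing a `send` callable that returns `False` makes `reconcile` return `"FAILED"` after calling both the status update and the support notification, which mirrors the failure branch in the text.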

Integration Steps

Let’s use a very common order entry and tracking scenario to discuss integration from here on. The following sections describe the high-level steps required to transmit order information from an Order Processing pipeline component into the SAP/R3 application, and to receive order status updates from the SAP/R3 application.

The integration of AFS purchase order reception with SAP is achieved using the BizTalk Adapter for SAP (BTS-SAP). The adapter uses the IDOC handler to provide transactional support when bridging tRFC (transactional Remote Function Call) and MSMQ through the Distributed Transaction Coordinator (DTC). The IDOC handler is a COM object that processes IDOC documents sent from SAP through the Com4ABAP service and ensures that they arrive successfully at the appropriate MSMQ destination. The handler supports the methods defined by the SAP tRFC protocol. When integrating purchase order reception with the SAP/R3 application, BizTalk Server (BTS) provides the transformation and messaging functionality, and the BizTalk Adapter for SAP provides the transport and routing functionality.
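
The exactly-once behavior that tRFC relies on can be illustrated with a small Python model. This is a hypothetical sketch, not the IDOC handler’s interface: the class and method names are invented and only loosely follow the tRFC sequence of checking a transaction ID (TID), committing or rolling back, and confirming. A Python list stands in for the MSMQ queue, and no DTC transaction is involved.

```python
class TidLedger:
    """Hypothetical bookkeeping that gives tRFC-style exactly-once delivery."""

    def __init__(self):
        self.committed = set()  # TIDs whose IDOCs have reached the queue
        self.pending = {}       # TID -> IDOCs received but not yet committed

    def check_tid(self, tid):
        """Return False if this TID was already committed (a retry)."""
        return tid not in self.committed

    def receive(self, tid, idoc):
        self.pending.setdefault(tid, []).append(idoc)

    def commit(self, tid, target_queue):
        """Move all staged IDOCs for the TID to the queue together."""
        target_queue.extend(self.pending.pop(tid, []))
        self.committed.add(tid)

    def rollback(self, tid):
        self.pending.pop(tid, None)

    def confirm(self, tid):
        """The sender has recorded success, so the TID can be forgotten."""
        self.committed.discard(tid)


def deliver(ledger, tid, idocs, target_queue):
    """One tRFC call: skip retries of committed TIDs, else stage and commit."""
    if not ledger.check_tid(tid):
        return False
    for idoc in idocs:
        ledger.receive(tid, idoc)
    ledger.commit(tid, target_queue)
    return True
```

Calling `deliver` twice with the same TID writes the IDOC to the queue only once, which is the property a retried tRFC call depends on.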

The following two sequential steps indicate how the whole integration takes place:

- Purchase Order Reception Integration

- Order Status Update Integration

Purchase Order Reception Integration

- Suppose a new pipeline component is added to the Order Processing pipeline. This component creates an XML document that is equivalent to the OrderForm object that is passed through the pipeline. This XML purchase order is in Commerce Server Order XML v1.0 format and, once created, is sent to a special Microsoft Message Queue (MSMQ) queue created specifically for this purpose.

Writing the order from the pipeline to MSMQ:

The first step in sending order data to the SAP/R3 application involves building a new pipeline component to run within the Order Processing pipeline. This component must perform the following two tasks:

A] Make an XML-formatted copy of the OrderForm object that is passing through the order processing pipeline. The GenerateXMLForDictionaryUsingSchema method of the DictionaryXMLTransforms object is used to create the copy.

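    ' Pipeline component entry point: copies the OrderForm to XML and queues it.
    ' sSchemaLocation, sQueueName, sBTSServerName, PO_TO_ERP_QUEUE_LABEL and
    ' AFS_PO_MAXTIMETOREACHQUEUE are module-level settings defined elsewhere.
    Private Function IPipelineComponent_Execute(ByVal objOrderForm As Object, _
        ByVal objContext As Object, ByVal lFlags As Long) As Long

        On Error GoTo ERROR_Execute

        Dim oXMLTransforms As Object
        Dim oXMLSchema As Object
        Dim oOrderFormXML As Object

        IPipelineComponent_Execute = 1   ' 1 = success

        ' Load the schema that describes the OrderForm dictionary.
        Set oXMLTransforms = CreateObject("Commerce.DictionaryXMLTransforms")
        Set oXMLSchema = oXMLTransforms.GetXMLFromFile(sSchemaLocation)

        ' Build the XML copy of the OrderForm using that schema.
        Set oOrderFormXML = oXMLTransforms.GenerateXMLForDictionaryUsingSchema( _
            objOrderForm, oXMLSchema)

        ' Hand the XML purchase order to MSMQ for BizTalk to pick up.
        WritePO2MSMQ sQueueName, oOrderFormXML.xml, PO_TO_ERP_QUEUE_LABEL, _
            sBTSServerName, AFS_PO_MAXTIMETOREACHQUEUE

        Exit Function

    ERROR_Execute:
        App.LogEvent "QueuePO.CQueuePO -> Execute Error: " & _
            vbCrLf & Err.Description, vbLogEventTypeError
        IPipelineComponent_Execute = 2   ' 2 = warning
        ' Stop here rather than resuming with a partially built order.
        Exit Function

    End Function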
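
Conceptually, `GenerateXMLForDictionaryUsingSchema` walks the OrderForm dictionary and writes the values that the schema describes as XML. The Python sketch below imitates that idea with `xml.etree.ElementTree`; it is not the Commerce Server implementation, and representing the schema as a simple set of field names, the `orderform`/`item` element names, and the attribute layout are all assumptions for illustration.

```python
import xml.etree.ElementTree as ET


def order_form_to_xml(order_form, schema_fields, root_name="orderform"):
    """Copy the schema-listed entries of a dict into an XML document.

    Scalar values become attributes of the root element; a list of dicts
    (for example line items) becomes a child element with one <item> per entry.
    Keys not named in schema_fields are skipped, mimicking a schema filter.
    """
    root = ET.Element(root_name)
    for key, value in order_form.items():
        if key not in schema_fields:
            continue
        if isinstance(value, list):
            container = ET.SubElement(root, key)
            for entry in value:
                item = ET.SubElement(container, "item")
                for name, field_value in entry.items():
                    item.set(name, str(field_value))
        else:
            root.set(key, str(value))
    return ET.tostring(root, encoding="unicode")
```

The schema filter is the important design point: only fields the schema names end up in the XML purchase order, so internal dictionary entries are not leaked downstream.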

B] Send the newly created XML order document to the MSMQ queue defined for this purpose.

    Option Explicit

    ' MSMQ constants (values match the MSMQ COM type library).
    Const MQ_RECEIVE_ACCESS = 1
    Const MQ_SEND_ACCESS = 2
    Const MQ_PEEK_ACCESS = 32
    Const MQ_DENY_NONE = 0
    Const MQ_DENY_RECEIVE_SHARE = 1
    Const MQ_MTS_TRANSACTION = 1
    Const MQ_XA_TRANSACTION = 2
    Const MQ_SINGLE_MESSAGE = 3
    Const MQ_ERROR_QUEUE_NOT_EXIST = -1072824317
    Const MQMSG_ACKNOWLEDGMENT_FULL_REACH_QUEUE = 5
    Const MQMSG_ACKNOWLEDGMENT_FULL_RECEIVE = 14
    Const DEFAULT_MAX_TIME_TO_REACH_QUEUE = 20   ' seconds

    ' Sends sMsgBody to a private queue on sServerName.
    ' Returns 0 on success or the error number on failure.
    Function WritePO2MSMQ(sQueueName As String, sMsgBody As String, _
        sMsgLabel As String, sServerName As String, _
        Optional MaxTimeToReachQueue As Variant) As Long

        Dim lMaxTime As Long
        Dim objQueueInfo As MSMQ.MSMQQueueInfo
        Dim objQueue As MSMQ.MSMQQueue
        Dim objQueueMsg As MSMQ.MSMQMessage

        On Error GoTo MSMQ_Error

        If IsMissing(MaxTimeToReachQueue) Then
            lMaxTime = DEFAULT_MAX_TIME_TO_REACH_QUEUE
        Else
            lMaxTime = MaxTimeToReachQueue
        End If

        ' Open the private queue by direct format name for sending.
        Set objQueueInfo = New MSMQ.MSMQQueueInfo
        objQueueInfo.FormatName = "DIRECT=OS:" & sServerName & "\PRIVATE$\" & sQueueName
        Set objQueue = objQueueInfo.Open(MQ_SEND_ACCESS, MQ_DENY_NONE)

        ' Build the message: label, XML body, acknowledgment and time limit.
        Set objQueueMsg = New MSMQ.MSMQMessage
        objQueueMsg.Label = sMsgLabel
        objQueueMsg.Body = sMsgBody
        objQueueMsg.Ack = MQMSG_ACKNOWLEDGMENT_FULL_REACH_QUEUE
        objQueueMsg.MaxTimeToReachQueue = lMaxTime

        ' MQ_SINGLE_MESSAGE sends the message as a single-message transaction,
        ' so the target queue is expected to be transactional.
        objQueueMsg.Send objQueue, MQ_SINGLE_MESSAGE
        objQueue.Close
        WritePO2MSMQ = 0

    Cleanup:
        On Error Resume Next
        Set objQueueMsg = Nothing
        Set objQueue = Nothing
        Set objQueueInfo = Nothing
        Exit Function

    MSMQ_Error:
        App.LogEvent "Error in WritePO2MSMQ: " & Err.Description
        WritePO2MSMQ = Err.Number
        Resume Cleanup

    End Function
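
The MSMQ settings used by `WritePO2MSMQ` can be modelled in a few lines of Python to show what each one does. This is an in-memory simulation, not a wrapper around MSMQ: `SimulatedQueue`, `Message`, and the acknowledgment labels are hypothetical names. It shows the direct format name for a private queue, and how a message that does not reach the queue within its time limit is rejected, with a positive or negative arrival acknowledgment recorded as `MQMSG_ACKNOWLEDGMENT_FULL_REACH_QUEUE` requests.

```python
import time
from dataclasses import dataclass, field


def direct_format_name(server, queue_name):
    """Direct format name for a private queue, as built in WritePO2MSMQ."""
    return f"DIRECT=OS:{server}\\PRIVATE$\\{queue_name}"


@dataclass
class Message:
    label: str
    body: str
    max_time_to_reach_queue: float  # seconds, like MaxTimeToReachQueue
    sent_at: float = field(default_factory=time.monotonic)


class SimulatedQueue:
    """In-memory stand-in for an MSMQ queue; no MSMQ calls are made."""

    def __init__(self, format_name):
        self.format_name = format_name
        self.messages = []
        self.acks = []  # plays the role of the administration queue

    def arrive(self, msg, now=None):
        """Accept msg unless its time-to-reach-queue has already elapsed."""
        now = time.monotonic() if now is None else now
        if now - msg.sent_at > msg.max_time_to_reach_queue:
            self.acks.append(("NEGATIVE_ARRIVAL", msg.label))
            return False
        self.messages.append(msg)
        self.acks.append(("POSITIVE_ARRIVAL", msg.label))
        return True
```

For example, a message created with `sent_at=0.0` and a 20-second limit is accepted when it arrives at `now=5.0` but rejected at `now=25.0`, leaving a negative arrival acknowledgment behind.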

- Receiving the XML order from MSMQ: in the second step of sending order data to the SAP/R3 application, BTS receives the order data from the MSMQ queue into which it was placed at the end of the first step. You must configure a BTS MSMQ receive function to monitor that queue. The receive function picks up the XML order and forwards it to the BTS channel configured to transform it.

- The third step in sending order data to the SAP/R3 application involves BTS transforming the order data from Commerce Server Order XML v1.0 format into ORDERS01 IDOC format. A BTS channel must be configured to perform this transformation. After the transformation is complete, the BTS channel sends the resulting ORDERS01 IDOC message to the corresponding BTS messaging port. The BTS messaging port is configured to send the transformed message to an MSMQ queue called the 840 Queue. Once the message is placed in this queue, the BizTalk Adapter for SAP is responsible for further processing.

- The BizTalk Adapter for SAP sends the ORDERS01 document to the DCOM Connector (more information on the DCOM Connector is available at www.sap.com/bapi), which writes the order to the SAP/R3 application. The DCOM Connector is an SAP software product that provides a mechanism to send data to, and receive data from, an SAP system. When an IDOC message is placed in the 840 Queue, the DCOM Connector retrieves the message and sends it to SAP for processing. Although this processing is in the domain of the BizTalk Adapter for SAP, the steps involved are reviewed here as background information:

- Determine the version of the IDOC schema in use and generate a BizTalk Server document specification.

- Create a routing key from the contents of the Control Record of the IDOC schema.

- Request a SAP Destination from the Manager Data Store given the constructed routing key.

- Submit the IDOC message to the SAP System using the DCOM Connector 4.6D Submit functionality.
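
The routing part of these steps, building a key from the control record and looking up an SAP destination, can be sketched in Python. The control record field names (`IDOCTYP`, `MESTYP`, `SNDPRN`, `RCVPRN`) are standard IDOC control record fields, but which fields the BizTalk Adapter for SAP actually combines into its routing key is not described here, so the key format and the dictionary used in place of the Manager Data Store are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlRecord:
    """A few fields from an IDOC control record (EDI_DC40)."""
    idoctyp: str  # basic IDOC type, e.g. ORDERS01
    mestyp: str   # message type, e.g. ORDERS
    sndprn: str   # sender partner number
    rcvprn: str   # receiver partner number


def routing_key(ctrl):
    """Hypothetical key format: the adapter's real key layout may differ."""
    return "|".join((ctrl.idoctyp, ctrl.mestyp, ctrl.sndprn, ctrl.rcvprn))


def find_destination(destinations, ctrl):
    """Look up the SAP destination registered for this control record.

    `destinations` is a plain dict standing in for the Manager Data Store.
    """
    key = routing_key(ctrl)
    if key not in destinations:
        raise LookupError(f"no SAP destination configured for {key}")
    return destinations[key]
```

Raising an error for an unknown key, rather than guessing a default system, keeps a misrouted IDOC visible instead of silently delivering it to the wrong SAP client.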

Order Status Update Integration

Order status update integration can be achieved by providing a mechanism for sending information about updates made within the SAP/R3 application back to the Commerce Server order system.

The following sequence of steps describes such a mechanism:

- BizTalk Adapter for SAP processing:

After a user has updated a purchase order using the SAP client, and the IDOC has been submitted to the appropriate tRFC port, the BizTalk Adapter for SAP uses the DCOM connector to send the resulting information to the 840 Queue, packaged as an ORDERS01 IDOC message. The 840 Queue is an MSMQ queue into which the BizTalk Adapter for SAP places IDOC messages so that they can be retrieved and processed by interested parties. This process is within the domain of the BizTalk Adapter for SAP, and is used by this solution to achieve the order update integration.

- Receiving the ORDERS01 IDOC message from MSMQ:

The second step in updating order status from the SAP/R3 application involves BTS receiving the ORDERS01 IDOC message from the MSMQ queue (840 Queue) into which it was placed at the end of the first step. You must configure a BTS MSMQ receive function to monitor the 840 Queue and forward each message to the configured BTS channel for transformation.
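
A receive function’s job in these steps is simple: take each message off the monitored queue and hand it to a channel. The Python sketch below models that with the standard `queue` module; `run_receive_function` is a hypothetical helper, a `queue.Queue` stands in for the 840 Queue, and any callable stands in for the BTS channel. A real BizTalk receive function keeps monitoring its queue, whereas this sketch drains it once.

```python
import queue


def run_receive_function(source, channel):
    """Forward every message currently in `source` to `channel`.

    `source` is a queue.Queue standing in for the monitored MSMQ queue and
    `channel` is any callable standing in for the BTS channel.
    Returns the number of messages forwarded.
    """
    forwarded = 0
    while True:
        try:
            message = source.get_nowait()
        except queue.Empty:
            return forwarded
        channel(message)
        forwarded += 1
```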

- Transforming the order update from IDOC format:

Using a BTS MSMQ receive function, the document is retrieved and passed to a BTS transformation channel. The BTS channel transforms the ORDERS01 IDOC message into Commerce Server Order XML v1.0 format and then forwards it to the corresponding BTS messaging port. You must configure a BTS channel to perform this transformation. The following BizTalk Server (BTS) map, used while prototyping this solution, transforms an SAP ORDERS01 IDOC message into an XML document in Commerce Server Order XML v1.0 format. It allows a change to an order in the SAP/R3 application to be reflected in the Commerce Server orders database.

The map used in the prototype maps only the order ID, which demonstrates how the order in the SAP/R3 application can be synchronized with the order in the Commerce Server orders database. Mapping the other fields is specific to a particular implementation and was not done for the prototype.

    <xsl:stylesheet xmlns:xsl='http://www.w3.org/1999/XSL/Transform'
        xmlns:msxsl='urn:schemas-microsoft-com:xslt' xmlns:var='urn:var'
        xmlns:user='urn:user' exclude-result-prefixes='msxsl var user'
        version='1.0'>
      <xsl:output method='xml' omit-xml-declaration='yes' />
      <xsl:template match='/'>
        <xsl:apply-templates select='ORDERS01'/>
      </xsl:template>
      <xsl:template match='ORDERS01'>
        <orderform>
          <!-- Connection from source node "BELNR" to destination node "OrderID" -->
          <xsl:if test='E2EDK02/@BELNR'>
            <xsl:attribute name='OrderID'>
              <xsl:value-of select='E2EDK02/@BELNR'/>
            </xsl:attribute>
          </xsl:if>
        </orderform>
      </xsl:template>
    </xsl:stylesheet>
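
For readers without an XSLT processor to hand, the following Python sketch applies the same single-field mapping with `xml.etree.ElementTree`. It assumes the attribute-style IDOC XML that the map above expects (BELNR as an attribute of E2EDK02); `map_orders01_to_orderform` is a hypothetical helper for illustration, not a replacement for the BizTalk map.

```python
import xml.etree.ElementTree as ET


def map_orders01_to_orderform(idoc_xml):
    """Copy ORDERS01/E2EDK02/@BELNR to orderform/@OrderID, like the map.

    Returns an empty string when the document element is not ORDERS01,
    matching the map, which then produces no output.
    """
    source = ET.fromstring(idoc_xml)
    if source.tag != "ORDERS01":
        return ""
    target = ET.Element("orderform")
    # value-of returns the first BELNR in document order, so stop at the first.
    for segment in source.findall("E2EDK02"):
        if "BELNR" in segment.attrib:
            target.set("OrderID", segment.get("BELNR"))
            break
    return ET.tostring(target, encoding="unicode")
```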

The BTS messaging port posts the transformed order update document to the configured ASP page for further processing. That page retrieves the posted message and uses the Commerce Server OrderGroupManager and OrderGroup objects to update the order status information in the Commerce Server orders database.

- Updating the Commerce Server order system:

The fourth step in updating order status from the SAP/R3 application involves updating the Commerce Server order system to reflect the change in status. This is accomplished by adding the page _OrderStatusUpdate.asp to the AFS Solution Site and configuring the BTS messaging port to post the transformed XML document to that page. The update is performed using the Commerce Server OrderGroupManager and OrderGroup objects.

The routine ProcessOrderStatus is the primary routine in the page. It uses the DOM and XPath to extract enough information to find the appropriate order using the OrderGroupManager object. Once the correct order is located, it is loaded into an OrderGroup object so that any of the entries in the OrderGroup object can be updated as needed.
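
Before the listing, here is a compact Python model of the logic in `ProcessOrderStatus`: parse the posted document, read `OrderID` from the `orderform` element, find the matching order, and set its status to 2 (saved order). A dictionary keyed by requisition number stands in for the Commerce Server orders database, so `process_order_status` and its data layout are hypothetical.

```python
import xml.etree.ElementTree as ET

SAVED_ORDER = 2  # the prototype page uses 2 = saved order


def process_order_status(document, orders):
    """Set the status of the order named by <orderform OrderID="..."/>.

    `orders` is a dict keyed by requisition number that stands in for the
    Commerce Server orders database. Returns True if an order was updated.
    """
    try:
        root = ET.fromstring(document)
    except ET.ParseError:
        return False  # corresponds to "Unable to load received XML into DOM."
    if root.tag != "orderform":
        return False
    order = orders.get(root.get("OrderID"))
    if order is None:
        return False  # creating a new order is not implemented, as in the page
    order["order_status_code"] = SAVED_ORDER
    return True
```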

The following code implements page _OrderStatusUpdate.asp:

    <%@ Language="VBScript" %>
    <%
    const TEMPORARY_FOLDER = 2

    call Main()

    Sub Main()
        call ProcessOrderStatus( ParseRequestForm() )
    End Sub

    ' Finds the order named in the posted <orderform OrderID="..."/> document
    ' and updates its status in the Commerce Server orders database.
    Sub ProcessOrderStatus(sDocument)
        Dim oOrderGroupMgr
        Dim oOrderGroup
        Dim rs
        Dim sPONum
        Dim oAttr
        Dim vResult
        Dim vTracking
        Dim oXML
        Dim dictConfig
        Dim oElement

        Set oOrderGroupMgr = Server.CreateObject("CS_Req.OrderGroupManager")
        Set oOrderGroup = Server.CreateObject("CS_Req.OrderGroup")
        Set oXML = Server.CreateObject("MSXML.DOMDocument")
        oXML.async = False

        If oXML.loadXML (sDocument) Then
            ' Get the orderform element.
            Set oElement = oXML.selectSingleNode("/orderform")
            ' Get the poNum.
            sPONum = oElement.getAttribute("OrderID")
            Set dictConfig = Application("MSCSAppConfig").GetOptionsDictionary("")
            ' Use ordergroupmgr to find the order by OrderID.
            oOrderGroupMgr.Initialize (dictConfig.s_CatalogConnectionString)
            ' Double any single quotes so the value cannot break the search clause.
            Set rs = oOrderGroupMgr.Find( _
                Array("order_requisition_number='" & Replace(sPONum, "'", "''") & "'"), _
                Array(""), Array(""))
            If rs.EOF And rs.BOF Then
                ' Create a new one. - Not implemented in this version.
            Else
                ' Edit the current one.
                oOrderGroup.Initialize dictConfig.s_CatalogConnectionString, rs("User_ID")
                ' Load the found order.
                oOrderGroup.LoadOrder rs("ordergroup_id")
                ' For the purposes of prototype, we only update the status
                oOrderGroup.Value.order_status_code = 2 ' 2 = Saved order
                ' Save it
                vResult = oOrderGroup.SaveAsOrder(vTracking)
            End If
        Else
            WriteError "Unable to load received XML into DOM."
        End If
    End Sub

    Function ParseRequestForm()
        Dim PostedDocument
        Dim ContentType
        Dim CharSet
        Dim EntityBody
        Dim Stream
        Dim StartPos
        Dim EndPos

        ContentType = Request.ServerVariables( "CONTENT_TYPE" )

        ' Determine request entity body character set (default to us-ascii).
        CharSet = "us-ascii"
        StartPos = InStr( 1, ContentType, "CharSet=""", 1)
        If (StartPos > 0 ) then
            StartPos = StartPos + Len("CharSet=""")
            EndPos = InStr( StartPos, ContentType, """",1 )
            CharSet = Mid (ContentType, StartPos, EndPos - StartPos )
        End If

        ' Check for multipart MIME message.
        PostedDocument = ""
        if ( ContentType = "" or Request.TotalBytes = 0) then
            ' Content-Type is required as well as an entity body.
            Response.Status = "406 Not Acceptable"
            Response.Write "Content-type or Entity body is missing" & VbCrlf
            Response.Write "Message headers follow below:" & VbCrlf
            Response.Write Request.ServerVariables("ALL_RAW") & VbCrlf
            Response.End
        Else
            If ( InStr( 1,ContentType,"multipart/" ) >
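
The character-set check in `ParseRequestForm` only recognizes a quoted `CharSet="..."` parameter, while HTTP clients often send it unquoted (for example `text/xml; charset=utf-8`). As an illustration of more tolerant parsing, this Python sketch uses the standard library’s `email.message.Message` to read the media type and charset; `parse_content_type` is a hypothetical helper, not part of the page.

```python
from email.message import Message


def parse_content_type(content_type, default_charset="us-ascii"):
    """Return (is_multipart, charset) for an HTTP Content-Type value.

    Accepts both quoted and unquoted charset parameters; the charset is
    returned in lower case, or default_charset if none is given.
    """
    header = Message()
    header["Content-Type"] = content_type
    is_multipart = header.get_content_maintype() == "multipart"
    return is_multipart, header.get_content_charset(default_charset)
```

Both `text/xml; charset="UTF-8"` and `text/xml; charset=utf-8` yield `(False, "utf-8")`, while a header with no charset falls back to `us-ascii`, the same default the ASP page uses.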

.NET Support

This multi-tier application environment can be implemented with a Web portal built on the Microsoft .NET Enterprise Server model. The Microsoft BizTalk Server Toolkit for Microsoft .NET makes it possible to use XML Web services and Visual Studio .NET to build dynamic, transaction-based, fault-tolerant systems with full access to existing applications.

Summary

Microsoft BizTalk Server can help organizations quickly establish and manage Internet relationships with other organizations. It lets them automate document interchange with any other organization, regardless of the data formats and conversion requirements involved. This provides a cost-effective approach to integrating business processes across large Enterprise Resource Planning systems. BizTalk Server is designed to facilitate collaborative e-commerce business processes: it includes a document interchange engine, a business process execution engine, and a set of business document and server management tools. In addition, it provides business document editor and mapper tools, as well as tools for managing trading partner relationships, administering server clusters, and tracking transactions.

References

![ImageGen[1]](https://sharepointsamurai.files.wordpress.com/2014/06/imagegen1.png?w=474)

One of the core concepts of Business Connectivity Services (BCS) for


The RFC adapter converts incoming RFC calls to XML, and XML messages to outgoing RFC calls. We can have both synchronous (sRFC) and asynchronous (tRFC) communication with SAP systems. The former works with Best Effort (BE) Quality of Service (QoS), while the latter works with Exactly Once (EO).

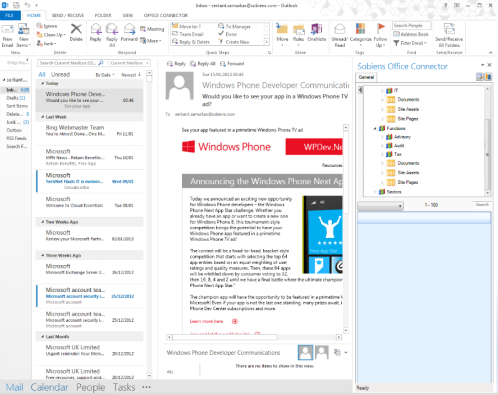


Now, create the relevant communication channel in the XI Integration Directory. Set the adapter type to RFC Sender (see the figure above). Specify the application server and gateway service of the sender SAP system. Specify the program ID, using exactly the same program ID that you provided when creating the RFC destination in the SAP system; note that the program ID is case-sensitive. Provide the application server details and logon credentials in the RFC metadata repository parameters. Save and activate the channel. Note that the RFC definition that you import into the Integration Repository is used only at design time. At runtime, XI loads the metadata from the sender SAP system by using the credentials provided here.
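
Because a mismatched program ID is an easy mistake, the channel settings above can be cross-checked before the channel is activated. The Python sketch below is a hypothetical checklist, not an XI API: the `RfcDestination` and `RfcSenderChannel` classes and their fields are invented to mirror the values the text asks for, and the comparison is case-sensitive because program IDs are.

```python
from dataclasses import dataclass


@dataclass
class RfcDestination:
    """RFC destination of type 'T' defined in the sending SAP system."""
    name: str
    program_id: str


@dataclass
class RfcSenderChannel:
    """The RFC sender channel values discussed above (hypothetical model)."""
    application_server: str
    gateway_service: str
    program_id: str


def validate_channel(destination, channel):
    """Return a list of problems; an empty list means the settings line up."""
    problems = []
    if not channel.application_server:
        problems.append("application server is empty")
    if not channel.gateway_service:
        problems.append("gateway service is empty")
    if channel.program_id != destination.program_id:
        # Program IDs are case-sensitive, so call out case-only differences.
        if channel.program_id.lower() == destination.program_id.lower():
            problems.append("program ID differs only in case")
        else:
            problems.append("program ID does not match the RFC destination")
    return problems
```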

Create an RFC destination of type ‘T’ in the SAP system as described previously. Then go to the XI Integration Repository and import the RFC function module STFC_CONNECTION from the SAP system. Activate your change list.

Next, complete the remaining Integration Directory configuration objects, such as the Sender Agreement, Receiver Determination, Interface Determination, and Receiver Agreement. No interface mapping is necessary. Activate your change list.

Figure 1. Select SAP in the Attach Data Source Wizard

Figure 2. Enter connection information in the Attach Data Source Wizard

Figure 3. Select the BusinessPartner and Product entities in the Attach Data Source Wizard

Figure 4. Entity Designer showing the Product entity

Figure 5. Properties on the BusinessPartner entity have been set to the appropriate business type

Figure 6. The ProductDetail entity

Figure 7. Adding a relationship

Figure 8. Configuring the relationship

Figure 9. Adding a new Common Screen Set

Figure 10. Writing “created” code on the AddEditProduct screen

Figure 11. Control layout

Figure 12. Changing Product Pic Url to an Image control

Figure 13. Properties of the Product Pic Url control

Figure 14. The ViewProduct screen

Figure 15. The AddEditProduct screen

is not only something that SAP is promoting (Going Mobile at SAP), but also partners, customer and analysts like Gartner or Forbes are clearly supporting this strategy.
