Believe it or not, sometimes app developers write code that doesn’t work. Or the code works but is terribly inefficient and hogs memory. Worse yet, inefficient code results in a poor UX, driving users crazy and compelling them to uninstall the app and leave bad reviews.

I’m going to explore common performance and efficiency problems you might encounter while building Windows Store apps with JavaScript. In this article, I take a look at best practices for error handling using the Windows Library for JavaScript (WinJS). In a future article, I’ll discuss techniques for doing work without blocking the UI thread, specifically using Web Workers or the new WinJS.Utilities.Scheduler API in WinJS 2.0, as found in Windows 8.1. I’ll also present the new predictable-object lifecycle model in WinJS 2.0, focusing particularly on when and how to dispose of controls.

For each subject area, I focus on three things:

- Errors or inefficiencies that might arise in a Windows Store app built using JavaScript.

- Diagnostic tools for finding those errors and inefficiencies.

- WinJS APIs, features and best practices that can ameliorate specific problems.

I provide some purposefully buggy code but, rest assured, I indicate in the code that something is or isn’t supposed to work.

I use Visual Studio 2013, Windows 8.1 and WinJS 2.0 for these demonstrations. Many of the diagnostic tools I use are provided in Visual Studio 2013. If you haven’t downloaded the most-recent versions of the tools, you can get them from the Windows Dev Center (bit.ly/K8nkk1). New diagnostic tools are released through Visual Studio updates, so be sure to check for updates periodically.

I assume significant familiarity with building Windows Store apps using JavaScript. If you’re relatively new to the platform, I suggest beginning with the basic “Hello World” example (bit.ly/vVbVHC) or, for more of a challenge, the Hilo sample for JavaScript (bit.ly/SgI0AA).

Setting up the Example

First, I create a new project in Visual Studio 2013 using the Navigation App template, which provides a good starting point for a basic multipage app. I also add a NavBar control (bit.ly/14vfvih) to the default.html page at the root of the solution, replacing the AppBar code the template provided. Because I want to demonstrate multiple concepts, diagnostic tools and programming techniques, I’ll add a new page to the app for each demonstration. This makes it much easier for me to navigate between all the test cases.

The complete HTML markup for the NavBar is shown in Figure 1. Copy and paste this code into your solution if you’re following along with the example.

Figure 1 The NavBar Control

<!-- The global navigation bar for the app. -->

<div id="navBar" data-win-control="WinJS.UI.NavBar">

<div id="navContainer"

data-win-control="WinJS.UI.NavBarContainer">

<div id="homeNav"

data-win-control="WinJS.UI.NavBarCommand"

data-win-options="{

location: '/pages/home/home.html',

icon: 'home',

label: 'Home page'

}">

</div>

<div id="handlingErrors"

data-win-control="WinJS.UI.NavBarCommand"

data-win-options="{

location: '/pages/handlingErrors/handlingErrors.html',

icon: 'help',

label: 'Handling errors'

}">

</div>

<div id="chainedAsync"

data-win-control="WinJS.UI.NavBarCommand"

data-win-options="{

location: '/pages/chainedAsync/chainedAsync.html',

icon: 'link',

label: 'Chained asynchronous calls'

}">

</div>

</div>

</div>

For more information about building a navigation bar, check out some of the Modern Apps columns by Rachel Appel, such as the one at msdn.microsoft.com/magazine/dn342878.

You can run this project with just the navigation bar, except that clicking any of the navigation buttons will raise an exception in navigator.js. Later in this article, I’ll discuss how to handle errors that come up in navigator.js. For now, remember the app always starts on the homepage and you need to right-click the app to bring up the navigation bar.

Handling Errors

Obviously, the best way to avoid errors is to release apps that don’t raise errors. In a perfect world, every developer would write perfect code that never crashes and never raises an exception. That perfect world doesn’t exist.

As much as users prefer apps that are completely error-free, they are exceptionally good at finding new and creative ways to break apps—ways you never dreamed of. As a result, you need to incorporate robust error handling into your apps.

Errors in Windows Store apps built with JavaScript and HTML act just like errors in normal Web pages. When an error happens in a Document Object Model (DOM) object that allows for error handling (for example, the <script>, <style> or <img> elements), the onerror event for that element is raised. For errors in the JavaScript call stack, the error travels up the chain of calls until caught (in a try/catch block, for instance) or until it reaches the window object, raising the window.onerror event.

WinJS provides several layers of error-handling opportunities for your code in addition to what’s already provided to normal Web pages by the Microsoft Web Platform. At a fundamental level, any error not trapped in a try/catch block or the onError handler applied to a WinJS.Promise object (in a call to the then or done methods, for example) raises the WinJS.Application.onerror event. I’ll examine that shortly.

In practice, you can listen for errors at other levels in addition to Application.onerror. With WinJS and the templates provided by Visual Studio, you can also handle errors at the page-control level and at the navigation level. When an error is raised while the app is navigating to and loading a page, the error triggers the navigation-level error handling, then the page-level error handling, and finally the application-level error handling. You can cancel the error at the navigation level, but any event handlers applied to the page error handler will still be raised.

In this article, I’ll take a look at each layer of error handling, starting with the most important: the Application.onerror event.

Application-Level Error Handling

WinJS provides the WinJS.Application.onerror event (bit.ly/1cOctjC), your app’s most basic line of defense against errors. It picks up all errors caught by window.onerror.” It also catches promises that error out and any errors that occur in the process of managing app model events. Although you can apply an event handler to the window.onerror event in your app, you’re better off just using Application.onerror for a single queue of events to monitor.

Once the Application.onerror handler catches an error, you need to decide how to address it. There are several options:

- For critical blocking errors, alert the user with a message dialog. A critical error is one that affects continued operation of the app and might require user input to proceed.

- For informational and non-blocking errors (such as a failure to sync or obtain online data), alert the user with a flyout or an inline message.

- For errors that don’t affect the UX, silently swallow the error.

- In most cases, write the error to a tracelog (especially one that’s hooked up to an analytics engine) so you can acquire customer telemetry. For available analytics SDKs, visit the Windows services directory at services.windowsstore.com and click on Analytics (under “By service type”) in the list on the left.

For this example, I’ll stick with message dialogs. I open up default.js (/js/default.js) and add the code shown in Figure 2 inside the main anonymous function, below the handler for the app.oncheckpoint event.

Figure 2 Adding a Message Dialog

app.onerror = function (err) {

var message = err.detail.errorMessage ||

(err.detail.exception && err.detail.exception.message) ||

"Indeterminate error";

if (Windows.UI.Popups.MessageDialog) {

var messageDialog =

new Windows.UI.Popups.MessageDialog(

message,

"Something bad happened ...");

messageDialog.showAsync();

return true;

}

}

In this example, the error event handler shows a message telling the user an error has occurred and what the error is. The event handler returns true to keep the message dialog open until the user dismisses it. (Returning true also informs the WWAHost.exe process that the error has been handled and it can continue.)

Senior C# & SharePoint Developer with 10 year’s development experience

Senior C# & SharePoint Developer with 10 year’s development experience

BSC degree in Computer Science and Information Systems

5 years experience in delivering SharePoint based solutions using OOB functionality and Custom Development

Extensive experience in

• Microsoft SharePoint platform, App Model (2010 & 2013)

• C# 2.0 – 4.5

• Advanced Workflow (Visual Studio, K2, Nintex)

• Development of Custom Web Parts

• Master Page Dev & Branding

• Integration of Back-end systems, including 3 SAP Projects, MS CRM, K2 BlackPearl,

Custom LOB Systems

• SQL Server (design,development, stored procedures, triggers)

• BCS, BDC – Implementing WCF, REST Services, Web Services

• SharePoint Excel Services, PowerPivot, Word Automation Services

• Custom Reports (MS SQL Reporting, Crystal Reports)

• Objected Oriented Programming and Patterns

• TFS 2010-2013

• Agile & SCRUM methodologies (ALM / SDLC)

• Microsoft Azure as database and hosting hybrid solutions

• Office 365 and SharePoint App Development

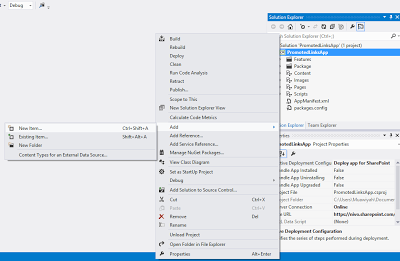

Now I’ll create some errors for this code to handle. I’ll create a custom error, throw the error and then catch it in the event handler. For this first example, I add a new folder named handlingErrors to the pages folder. In the folder, I add a new Page Control by right-clicking the project in Solution Explorer and selecting Add | New Item. When I add the handlingErrors Page Control to my project, Visual Studio provides three files in the handlingErrors folder (/pages/handlingErrors): handlingErrors.html, handlingErrors.js and handlingErrors.css.

I open up handlingErrors.html and add this simple markup inside the <section> tag of the body:

<!-- When clicked, this button raises a custom error. -->

<button id="throwError">Throw an error!</button>

Next, I open handlingErrors.js and add an event handler to the button in the ready method of the PageControl object, as shown in Figure 3. I’ve provided the entire PageControl definition in handlingErrors.js for context.

Figure 3 Definition of the handlingErrors PageControl

// For an introduction to the Page Control template, see the following documentation:

// http://go.microsoft.com/fwlink/?LinkId=232511

(function () {

"use strict";

WinJS.UI.Pages.define("/pages/handlingErrors/handlingErrors.html", {

ready: function (element, options) {

// ERROR: This code raises a custom error.

throwError.addEventListener("click", function () {

var newError = new WinJS.ErrorFromName("Custom error",

"I'm an error!");

throw newError;

});

},

unload: function () {

// Respond to navigations away from this page.

},

updateLayout: function (element) {

// Respond to changes in layout.

}

});

})();

Now I press F5 to run the sample, navigate to the handlingErrors page and click the “Throw an error!” button. (If you’re following along, you’ll see a dialog box from Visual Studio informing you an error has been raised. Click Continue to keep the sample running.) A message dialog then pops up with the error, as shown in Figure 4.

Figure 4 The Custom Error Displayed in a Message Dialog

Custom Errors

The Application.onerror event has some expectations about the errors it handles. The best way to create a custom error is to use the WinJS.ErrorFromName object (bit.ly/1gDESJC). The object created exposes a standard interface for error handlers to parse.

To create your own custom error without using the ErrorFromName object, you need to implement a toString method that returns the message of the error.

Otherwise, when your custom error is raised, both the Visual Studio debugger and the message dialog show “[Object object].” They each call the toString method for the object, but because no such method is defined in the immediate object, it goes through the chain of prototype inheritance for a definition of toString. When it reaches the Object primitive type that does have a toString method, it calls that method (which just displays information about the object).

Page-Level Error Handling

The PageControl object in WinJS provides another layer of error handling for an app. WinJS will call the IPageControlMembers.error method when an error occurs while loading the page. After the page has loaded, however, the IPageControlMembers.error method errors are picked up by the Application.onerror event handler, ignoring the page’s error method.

I’ll add an error method to the PageControl that represents the handleErrors page. The error method writes to the JavaScript console in Visual Studio using WinJS.log. The logging functionality needs to be started up first, so I need to call WinJS.Utilities.startLog before I attempt to use that method. Also note that I check for the existence of the WinJS.log member before I actually call it.

The complete code for handleErrors.js (/pages/handleErrors/handleErrors.js) is shown in Figure 5.

Figure 5 The Complete handleErrors.js

(function () {

"use strict";

WinJS.UI.Pages.define("/pages/handlingErrors/handlingErrors.html", {

ready: function (element, options) {

// ERROR: This code raises a custom error.

throwError.addEventListener("click", function () {

var newError = {

message: "I'm an error!",

toString: function () {

return this.message;

}

};

throw newError;

})

},

error: function (err) {

WinJS.Utilities.startLog({ type: "pageError", tags: "Page" });

WinJS.log && WinJS.log(err.message, "Page", "pageError");

},

unload: function () {

// TODO: Respond to navigations away from this page.

},

updateLayout: function (element) {

// TODO: Respond to changes in layout.

}

});

})();

WinJS.log

The call to WinJS.Utilities.startLog shown in Figure 5 starts the WinJS.log helper function, which writes output to the JavaScript console by default. While this helps greatly during design time for debugging, it doesn’t allow you to capture error data after users have installed the app.

For apps that are ready to be published and deployed, you should consider creating your own implementation of WinJS.log that calls into an analytics engine. This allows you to collect telemetry data about your app’s performance so you can fix unforeseen bugs in future versions of your app. Just make sure customers are aware of the data collection and that you clearly list what data gets collected by the analytics engine in your app’s privacy statement.

Note that when you overwrite WinJS.log in this way, the WinJS.log function will catch all output that would otherwise go to the JavaScript console, including things like status updates from the Scheduler. This is why you need to pass a meaningful name and type value into the call to WinJS.Utilities.startLog so you can filter out any errors you don’t want.

Now I’ll try running the sample and clicking “Throw an error!” again. This results in the exact same behavior as before: Visual Studio picks up the error and then the Application.onerror event fires. The JavaScript console doesn’t show any messages related to the error because the error was raised after the page loaded. Thus, the error was picked up only by the Application.onerror event handler.

So why use the PageControl error handling? Well, it’s particularly helpful for catching and diagnosing errors in WinJS controls that are created declaratively in the HTML. For example, I’ll add the following HTML markup inside the <section> tags of handleErrors.html (/pages/handleErrors/handleErrors.html), below the button:

<!-- ERROR: AppBarCommands must be button elements by default

unless specified otherwise by the 'type' property. -->

<div data-win-control="WinJS.UI.AppBarCommand"></div>

Now I press F5 to run the sample and navigate to the handleErrors page. Again, the message dialog appears until dismissed. However, the following message appears in the JavaScript console (you’ll need to switch back to the desktop to check this):

pageError: Page: Invalid argument: For a button, toggle, or flyout command, the element must be null or a button element

Note that the app-level error handling appeared even though I handled the error in the PageControl (which logged the error). So how can I trap an error on a page without having it bubble up to the application?

The best way to trap a page-level error is to add error handling to the navigation code. I’ll demonstrate that next.

Navigation-Level Error Handling

When I ran the previous test where the app.onerror event handler handled the page-level error, the app seemed to stay on the homepage. Yet, for some reason, a Back button control appeared. When I clicked the Back button, it took me to a (disabled) handlingErrors.html page.

This is because the navigation code in navigator.js (/js/navigator.js), which is provided in the Navigation App project template, still attempts to navigate to the page even though the page has fizzled. Furthermore, it navigates back to the homepage and adds the error-prone page to the navigation history. That’s why I see the Back button on the homepage after I’ve attempted to navigate to handlingErrors.html.

To cancel the error in navigator.js, I replace the PageControlNavigator._navigating function with the code in Figure 6. You see that the navigating function contains a call to WinJS.UI.Pages.render, which returns a Promise object. The render method attempts to create a new PageControl from the URI passed to it and insert it into a host element. Because the resulting PageControl contains an error, the returned promise errors out. To trap the error raised during navigation, I add an error handler to the onError parameter of the then method exposed by that Promise object. This effectively traps the error, preventing it from raising the Application.onerror event.

Figure 6 The PageControlNavigator._navigating Function in navigator.js

// Other PageControlNavigator code ...

// Responds to navigation by adding new pages to the DOM.

_navigating: function (args) {

var newElement = this._createPageElement();

this._element.appendChild(newElement);

this._lastNavigationPromise.cancel();

var that = this;

this._lastNavigationPromise = WinJS.Promise.as().then(function () {

return WinJS.UI.Pages.render(args.detail.location, newElement,

args.detail.state);

}).then(function parentElement(control) {

var oldElement = that.pageElement;

// Cleanup and remove previous element

if (oldElement.winControl) {

if (oldElement.winControl.unload) {

oldElement.winControl.unload();

}

oldElement.winControl.dispose();

}

oldElement.parentNode.removeChild(oldElement);

oldElement.innerText = "";

},

// Display any errors raised by a page control,

// clear the backstack, and cancel the error.

function (err) {

var messageDialog =

new Windows.UI.Popups.MessageDialog(

err.message,

"Sorry, can't navigate to that page.");

messageDialog.showAsync()

nav.history.backStack.pop();

return true;

});

args.detail.setPromise(this._lastNavigationPromise);

},

// Other PageControlNavigator code ...

Promises in WinJS

Creating promises and chaining promises—and the best practices for doing so—have been covered in many other places, so I’ll skip that discussion in this article. If you need more information, check out the blog post by Kraig Brockschmidt at bit.ly/1cgMAnu or Appendix A in his free e-book, “Programming Windows Store Apps with HTML, CSS, and JavaScript, Second Edition” (bit.ly/1dZwW1k).

Note that it’s entirely proper to modify navigator.js. Although it’s provided by the Visual Studio project template, it’s part of your app’s code and can be modified however you need.

In the _navigating function, I’ve added an error handler to the final promise.then call. The error handler shows a message dialog—as with the application-level error handling—and then cancels the error by returning true. It also removes the page from the navigation history.

When I run the sample again and navigate to handlingErrors.html, I see the message dialog that informs me the navigation attempt has failed. The message dialog from the application-level error handling doesn’t appear.

Tracking Down Errors in Asynchronous Chains

When building apps in JavaScript, I frequently need to follow one asynchronous task with another, which I address by creating promise chains. Chained promises will continue moving along through the tasks, even if one of the promises in the chain returns an error. A best practice is to always end a chain of promises with a call to the done method. The done function throws any errors that would’ve been caught in the error handler for any previous then statements. This means I don’t need to define error functions for each promise in a chain.

Even so, tracking errors down can be difficult in very long chains once they’re trapped in the call to promise.done. Chained promises can include multiple tasks, and any one of them could fail. I could set a breakpoint in every task to see where the error pops up, but that would be terribly time-consuming.

Here’s where Visual Studio 2013 comes to the rescue. The Tasks window (introduced in Visual Studio 2010) has been upgraded to handle asynchronous JavaScript debugging as well. In the Tasks window you can view all active and completed tasks at any given point in your app code.

For this next example, I’ll add a new page to the solution to demonstrate this awesome tool. In the solution, I create a new folder called chainedAsync in the pages folder and then add a new Page Control named chainAsync.html (which creates /pages/chainedAsync/chainedAsync.html and associated .js and .css files).

In chainedAsync.html, I insert the following markup within the <section> tags:

<!-- ERROR:Clicking this button starts a chain reaction with an error. -->

<p><button id="startChain">Start the error chain</button></p>

<p id="output"></p>

In chainedAsync.js, I add the event handler shown in Figure 7for the click event of the startChain button to the ready method for the page.

Figure 7 The Contents of the PageControl.ready Function in chainedAsync.js

startChain.addEventListener("click", function () {

goodPromise().

then(function () {

return goodPromise();

}).

then(function () {

return badPromise();

}).

then(function () {

return goodPromise();

}).

done(function () {

// This *shouldn't* get called

},

function (err) {

document.getElementById('output').innerText = err.toString();

});

});

Last, I define the functions goodPromise and badPromise, shown in Figure 8, within chainAsync.js so they’re available inside the PageControl’s methods.

Figure 8 The Definitions of the goodPromise and badPromise Functions in chainAsync.js

function goodPromise() {

return new WinJS.Promise(function (comp, err, prog) {

try {

comp();

} catch (ex) {

err(ex)

}

});

}

// ERROR: This returns an errored-out promise.

function badPromise() {

return WinJS.Promise.wrapError("I broke my promise :(");

}

I run the sample again, navigate to the “Chained asynchronous” page, and then click “Start the error chain.” After a short wait, the message “I broke my promise :(” appears below the button.

Now I need to track down where that error occurred and figure out how to fix it. (Obviously, in a contrived situation like this, I know exactly where the error occurred. For learning purposes, I’ll forget that badPromise injected the error into my chained promises.)

To figure out where the chained promises go awry, I’m going to place a breakpoint on the error handler defined in the call to done in the click handler for the startChain button, as shown in Figure 9.

Figure 9 The Position of the Breakpoint in chainedAsync.html

I run the same test again, and when I return to Visual Studio, the program execution has stopped on the breakpoint. Next, I open the Tasks window (Debug | Windows | Tasks) to see what tasks are currently active. The results are shown in Figure 10.

Figure 10 The Tasks Window in Visual Studio 2013 Showing the Error

At first, nothing in this window really stands out as having caused the error. The window lists five tasks, all of which are marked as active. As I take a closer look, however, I see that one of the active tasks is the Scheduler queuing up promise errors—and that looks promising (please excuse the bad pun).

(If you’re wondering about the Scheduler, I encourage you to read the next article in this series, where I’ll discuss the new Scheduler API in WinJS.)

When I hover my mouse over that row (ID 120 in Figure 10) in the Tasks window, I get a targeted view of the call stack for that task. I see several error handlers and, lo and behold, badPromise is near the beginning of that call stack. When I double-click that row, Visual Studio takes me right to the line of code in badPromise that raised the error. In a real-world scenario, I’d now diagnose why badPromise was raising an error.

WinJS provides several levels of error handling in an app, above and beyond the reliable try-catch-finally block. A well-performing app should use an appropriate degree of error handling to provide a smooth experience for users. In this article, I demonstrated how to incorporate app-level, page-level and navigation-level error handling into an app. I also demonstrated how to use some of the new tools in Visual Studio 2013 to track down errors in chained promises.

Create new SharePoint 2013 empty project

Create new SharePoint 2013 empty project Deploy the SharePoint site as a farm solution

Deploy the SharePoint site as a farm solution SharePoint solution items

SharePoint solution items Adding new item to SharePoint solution

Adding new item to SharePoint solution Adding new item of type site column to the solution

Adding new item of type site column to the solution Collapse | Copy Code

Collapse | Copy Code Adding new item of type Content Type to SharePoint solution

Adding new item of type Content Type to SharePoint solution Specifying the base type of the content type

Specifying the base type of the content type Adding columns to the content type

Adding columns to the content type Adding new module to SharePoint solution.

Adding new module to SharePoint solution.

Adding existing content type to the pages library

Adding existing content type to the pages library Selecting the content type to add it to pages library

Selecting the content type to add it to pages library Adding new document of the news content type to pages library

Adding new document of the news content type to pages library Creating new page of news content type to pages library.

Creating new page of news content type to pages library.

![micorosftazurelogo[1]](https://sharepointsamurai.files.wordpress.com/2014/08/micorosftazurelogo1.jpg?w=300&h=150)

![DuetEnterpriseDesign[1]](https://sharepointsamurai.files.wordpress.com/2014/10/duetenterprisedesign1.jpg?w=479&h=238)

Input the site URL which we need to open:

Input the site URL which we need to open: Enter your site credentials here:

Enter your site credentials here: Now we need to create the new external content type and here we have the options for changing the name of the content type and creating the connection for external data source:

Now we need to create the new external content type and here we have the options for changing the name of the content type and creating the connection for external data source: And click on the hyperlink text “Click here to discover the external data source operations, now this window will open:

And click on the hyperlink text “Click here to discover the external data source operations, now this window will open: Click on the “Add Connection “button, we can create a new connection. Here we have the different options to select .NET Type, SQL Server, WCF Service.

Click on the “Add Connection “button, we can create a new connection. Here we have the different options to select .NET Type, SQL Server, WCF Service. Here we selected SQL server, now we need to provide the Server credentials:

Here we selected SQL server, now we need to provide the Server credentials: In this screen, we have the options for creating different types of operations against the database:

In this screen, we have the options for creating different types of operations against the database: Click on the next button:

Click on the next button: Parameters Configurations:

Parameters Configurations: Options for Filter parameters Configuration:

Options for Filter parameters Configuration: Here we need to add new External List, Click on the “External List”:

Here we need to add new External List, Click on the “External List”: Select the Site here and click ok button:

Select the Site here and click ok button: Enter the list name here and click ok button:

Enter the list name here and click ok button: After that, refresh the SharePoint site, we can see the external list here and click on the list:

After that, refresh the SharePoint site, we can see the external list here and click on the list: Here we have the error message “Access denied by Business Connectivity.”

Here we have the error message “Access denied by Business Connectivity.” Click on the Business Data Connectivity Service:

Click on the Business Data Connectivity Service: Set the permission for this list:

Set the permission for this list: Click ok after setting the permissions:

Click ok after setting the permissions: After that, refresh the site and hope this will work… but again, it has a problem. The error message like Login failed for user “NT AUTHORITY\ANONYMOUS LOGON”.

After that, refresh the site and hope this will work… but again, it has a problem. The error message like Login failed for user “NT AUTHORITY\ANONYMOUS LOGON”. Then follow the below mentioned steps.

Then follow the below mentioned steps. And there is a chance for one more error like:

And there is a chance for one more error like: After clicking the Ok button, Visual Studio generates a solution with two projects, one Silverlight 4 project and one ASP.NET project. In the next section, we will create the SAP data access layer using the LINQ to SAP designer.

After clicking the Ok button, Visual Studio generates a solution with two projects, one Silverlight 4 project and one ASP.NET project. In the next section, we will create the SAP data access layer using the LINQ to SAP designer. In the above function dialog, change the method name to

In the above function dialog, change the method name to  After clicking the Ok button and saving the ERP file, the LINQ designer will generate a

After clicking the Ok button and saving the ERP file, the LINQ designer will generate a  Next, we add the service operation

Next, we add the service operation ![4214.TFS_2D00_LicenseOverview[1]](https://sharepointsamurai.files.wordpress.com/2014/10/4214-tfs_2d00_licenseoverview1.jpg?w=602&h=378)

![ppt_img[1]](https://sharepointsamurai.files.wordpress.com/2014/10/ppt_img1.gif?w=300&h=225)

![sap_integration_en_round[1]](https://sharepointsamurai.files.wordpress.com/2014/09/sap_integration_en_round1.jpg?w=300&h=155)

Note

Note![sap_integration_en_round[2]](https://sharepointsamurai.files.wordpress.com/2014/09/sap_integration_en_round2.jpg?w=300&h=155)

Important

Important

![microsoft-silverlight[1]](https://sharepointsamurai.files.wordpress.com/2014/09/microsoft-silverlight1.jpg?w=300&h=225)

![microsoft-silverlight2-developer-reference[1]](https://sharepointsamurai.files.wordpress.com/2014/09/microsoft-silverlight2-developer-reference1.jpg?w=597&h=372)

![Windows_Azure_Wallpaper_p754[1]](https://sharepointsamurai.files.wordpress.com/2014/09/windows_azure_wallpaper_p7541.jpg?w=300&h=225)

![office365logoorange_web[1]](https://sharepointsamurai.files.wordpress.com/2014/09/office365logoorange_web1.png?w=300&h=194)

![DevOps-Cloud[1]](https://sharepointsamurai.files.wordpress.com/2014/09/devops-cloud1.jpg?w=300&h=188)

![4503.DevOps_2D00_Barriers_5F00_1C41B571[1]](https://sharepointsamurai.files.wordpress.com/2014/09/4503-devops_2d00_barriers_5f00_1c41b5711.png?w=502&h=278)

![devops[1]](https://sharepointsamurai.files.wordpress.com/2014/09/devops1.png?w=300&h=241)

![DevOps-Lifecycle[1]](https://sharepointsamurai.files.wordpress.com/2014/09/devops-lifecycle1.png?w=536&h=193)

![slide5[1]](https://sharepointsamurai.files.wordpress.com/2014/09/slide51.jpg?w=474)

Tip

Tip

DNS is a huge part of the inner workings of the internet. spend a considerable amount of man hours a year ensuring the sites they build are fast and respond well to user interaction by setting up expensive CDN’s, recompressing images, minifying script files and much more – but what a lot of us don’t understand is that DNS server configuration can make a big difference to the speed of your site – hopefully at the end of this post you’ll feel empowered to get the most out of this part of your website’s configuration.

DNS is a huge part of the inner workings of the internet. spend a considerable amount of man hours a year ensuring the sites they build are fast and respond well to user interaction by setting up expensive CDN’s, recompressing images, minifying script files and much more – but what a lot of us don’t understand is that DNS server configuration can make a big difference to the speed of your site – hopefully at the end of this post you’ll feel empowered to get the most out of this part of your website’s configuration.

![SharePointOnline2L-1[1]](https://sharepointsamurai.files.wordpress.com/2014/09/sharepointonline2l-11.png?w=300&h=108)

![SharePointOnline2L-1[1]](https://sharepointsamurai.files.wordpress.com/2014/09/sharepointonline2l-111.png?w=415&h=161)

![bottlenecks[1]](https://sharepointsamurai.files.wordpress.com/2014/09/bottlenecks1.jpg?w=300&h=196)

![kaizen%20not%20kaizan[1]](https://sharepointsamurai.files.wordpress.com/2014/09/kaizen20not20kaizan1.png?w=300&h=289)

![IC648720[1]](https://sharepointsamurai.files.wordpress.com/2014/09/ic6487201.gif?w=300&h=181)

![taking-your-version-control-to-a-next-level-with-tfs-and-git-1-638[1]](https://sharepointsamurai.files.wordpress.com/2014/09/taking-your-version-control-to-a-next-level-with-tfs-and-git-1-6381.jpg?w=300&h=168)

![2352.vs_5F00_heart_5F00_git_5F00_thumb_5F00_66558A9C[1]](https://sharepointsamurai.files.wordpress.com/2014/09/2352-vs_5f00_heart_5f00_git_5f00_thumb_5f00_66558a9c1.png?w=300&h=113)

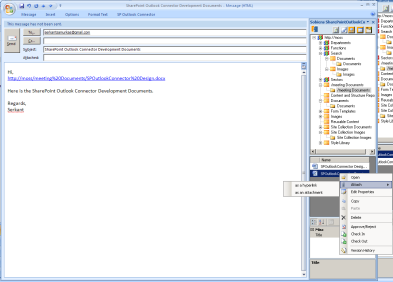

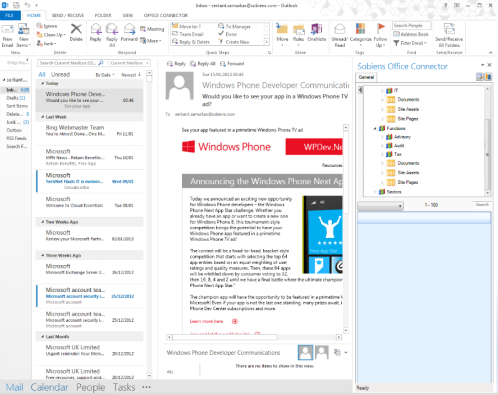

One of the key methods of gaining User Adoption of SharePoint is ensuring and pushing the integration it has with Microsoft Office to information workers. After all, information workers generally use Outlook as their ‘mother-ship’. Getting those users to switch immediately to SharePoint or, asking them to visit a document library in a SharePoint site which they will need to access could take time, especially since it means opening a browser, navigating to the site, covering their beloved Outlook client in the process.

One of the key methods of gaining User Adoption of SharePoint is ensuring and pushing the integration it has with Microsoft Office to information workers. After all, information workers generally use Outlook as their ‘mother-ship’. Getting those users to switch immediately to SharePoint or, asking them to visit a document library in a SharePoint site which they will need to access could take time, especially since it means opening a browser, navigating to the site, covering their beloved Outlook client in the process.

![IC630180[1]](https://sharepointsamurai.files.wordpress.com/2014/09/ic6301801.gif?w=300&h=90)

![2014-04-17_1958[1]](https://sharepointsamurai.files.wordpress.com/2014/08/2014-04-17_19581.png?w=495&h=316)

![code_refactoring[1]](https://sharepointsamurai.files.wordpress.com/2014/08/code_refactoring1.jpg?w=474)

![micorosftazurelogo[1]](https://sharepointsamurai.files.wordpress.com/2014/08/micorosftazurelogo1.jpg?w=266&h=138)

project acts as a deployment project, listing which roles are included in the cloud service, along with the definition and configuration files. It provides Azure-specific run, debug and publish functionality.

project acts as a deployment project, listing which roles are included in the cloud service, along with the definition and configuration files. It provides Azure-specific run, debug and publish functionality.

![SharePointOnline2L-1[2]](https://sharepointsamurai.files.wordpress.com/2014/08/sharepointonline2l-12.png?w=300&h=108)

![BI-infographic1[1]](https://sharepointsamurai.files.wordpress.com/2014/08/bi-infographic11.jpg?w=300&h=184)

![Site-Definition-03.png_2D00_700x0[1]](https://sharepointsamurai.files.wordpress.com/2014/08/site-definition-03_2d00_700x01.png?w=474)

![winjs_life_02[1]](https://sharepointsamurai.files.wordpress.com/2014/07/winjs_life_021.png?w=474)

![biztalk_adapter_2004-1[1]](https://sharepointsamurai.files.wordpress.com/2014/07/biztalk_adapter_2004-11.jpg?w=474&h=364)

Note

Note

Because you have all the components of BizTalk Adapter Service installed on the same computer, the URL for that service will be

Because you have all the components of BizTalk Adapter Service installed on the same computer, the URL for that service will be  The Add a Target wizard starts. Perform the following steps to create an LOB Target.

The Add a Target wizard starts. Perform the following steps to create an LOB Target. Click Next.

Click Next. Click OK. The schemas are added to the project under an LOB Schemas folder.

Click OK. The schemas are added to the project under an LOB Schemas folder. ] to open the Message Type Picker dialog box.

] to open the Message Type Picker dialog box. ], and then click OK. For this tutorial, select the Send schema (

], and then click OK. For this tutorial, select the Send schema (

Important

Important

![8322.sharepoint_2D00_2010_5F00_4855E582[1]](https://sharepointsamurai.files.wordpress.com/2014/07/8322-sharepoint_2d00_2010_5f00_4855e5821.jpg?w=474&h=261)

Tip:

Tip:  Note:

Note: