This weekend was spent completing my new “List Search Web Part” and also 2 Free Web Parts that is included in the “List Web Part Pack” – More about this in my future blog.

In between the “SAP Bug” bit me again and I decided to write a blog post series on the various adapters I have used in SharePoint and SAP Integration Projects and to give you a basic “run down” of how and with which technologies each adapter connects the 2 systems with.

ERPConnect was 1st on the list. ….

Yes, I can hear the grumblings of those of us who have worked with SAP and SharePoint Integration and the ERPConnect adapter before 🙂

For starters, you need to have a SAP Developer Key to be allowed to use the SAP web service wizard, and also have the required SAP authorizations. In other cases it may not be allowed by IT operations to make any modification to the SAP environment, even if it’s limited to the full-automatic generation and activation of the BAPI webservice(s).

Another reason from a system architecture viewpoint, is that the single BAPI and/or RFC calls may be of too low granularity. You actually want to perform a ‘business transaction’, consisting of multiple method invocations which must be treated as a Logical Unit of Work (LUW). SAP has introduced the concept of SAP Enterprise Services for this, and has delivered a first set of them. This is by far not complete yet, and SAP will augment it the coming years.

SharePoint 2010 provides developer with the capability to integrate external data sources like SAP business data via the Business Connectivity Services (BCS) into the SharePoint system. The concept of BCS is based on entities and associated stereotyped operations. This perfectly suits for flat and simple structured data sets like SAP tables.

Another and way more flexible option to use SAP data in SharePoint are the ERPConnect Services for SharePoint 2010 (ECS). The product suite consists of three product components: ERPConnect Services runtime, the BCS Connector application and the Xtract PPS for PerformancePoint Services.

The runtime is providing a Service Application that integrates itself with the new service architecture of SharePoint 2010. The runtime offers a secure middle-tier layer to integrate different kind of SAP objects in your SharePoint applications, like tables and function modules.

The BCS Connector application allows developers to create BDC models for the BCS Services, without programming knowledge. You may export the BDC models created by the BCS Connector to Visual Studio 2010 for further customizing.

The Xtract PPS component offers a SAP data source provider for the PerformancePoint Services of SharePoint 2010. T

his article gives you an overview of the ERPConnect Services runtime and shows how you can create and incorporate business data from SAP in different SharePoint application types, like Web Parts, Application Pages or Silverlight modules.

This article does not introduce the other components.

Background

This section will give you a short explanation and background of SAP objects that can be used in ERPConnect Services. The most important objects are SAP tables and function modules. A function module is basically similar to a normal procedure in conventional programming languages. Function modules are written in ABAP, the SAP programming language, and are accessible from any other programs within a SAP system. They accept import and export parameters as well as other kind of special parameters.

In addition, BAPIs (Business-API) are special function modules that are organized within the SAP Business Object Repository. In order to use function modules with the runtime they must be marked as Remote (RFC). SAP table data can also be retrieved. Tables in SAP are basically relational database tables. Others SAP objects like BW Cubes or SAP Queries can be accessed via the XtractQL query language (see below).

Installation & Configuration

Installing ERPConnect Services on a SharePoint 2010 server is done by an installer and is straight forward. The SharePoint Administration Service must run on the local server (see Windows Services).

For more information see product documentation. After the installation has been successfully processed navigate to the Service Applications screen within the central administration (CA) of SharePoint:

Before creating your first Service Application a Secure Store must be created, where ERPConnect Services will save SAP user credentials. In the settings page for the “Secure Store Service” create a new Target Application and name the application “ERPConnect Services”. Click on the button “Next” to define the store fields as follows:

Finish the creation process by clicking on “Next” and define application administrators. Then, mark the application, click “Set Credentials” and enter the SAP user credentials:

Let’s go on and create a new ERPConnect Service Application!

Click the “ERPConnect Service Application” link in the “New” menu of the Service Applications page (see also first screenshot above). This opens a dialog to define the name of the service application, the SAP connection data and the IIS application pool:

Click “Create” after entering all data and you will see the following entries in the Service Applications screen:

That’s it! You are now done setting up your first ERPConnect Service Application.

Development

The runtime functionality covers different programming demands such as generically retrievable interface functions. The service applications are managed by the Central Administration of SharePoint. The following service and function areas are provided:

- Executing and retrieving data directly from SAP tables

- Executing SAP function modules / BAPIs

- Executing XtractQL query statements

The next sections shows how to use these service and function areas and access different SAP objects from within your custom SharePoint applications using the ERPConnect Services. The runtime can be used in applications within the SharePoint context like Web Parts or Application Pages.

In order to do so, you need to reference the assembly i in the project. Before you can access data from the SAP system you must create an instance of the ERPConnectServiceClient class. This is the gate to all SAP objects and the generic API of the runtime in overall. In the SharePoint context there are two options to create a client object instance:

ERPConnectServiceClient client = new ERPConnectServiceClient();

ERPConnectServiceApplicationProxy proxy = SPServiceContext.Current.GetDefaultProxy(

typeof(ERPConnectServiceApplicationProxy)) as ERPConnectServiceApplicationProxy;

ERPConnectServiceClient client = proxy.GetClient();

For more details on using ECS in Silverlight or desktop applications see the specific sections below.

Querying Tables

Querying and retrieving table data is a common task for developers. The runtime allows retrieving data directly from SAP tables. The ERPConnectServiceClient class provides a method called ExecuteTableQuery with two overrides which query SAP tables in a simple way.

The method also supports a way to pass miscellaneous parameters like row count and skip, custom function, where clause definition and a returning field list. These parameters can be defined by using the ExecuteTableQuerySettings class instance.

DataTable dt = client.ExecuteTableQuery("T001");

…

ExecuteTableQuerySettings settings = new ExecuteTableQuerySettings {

RowCount = 100,

WhereClause = "ORT01 = 'Paris' AND LAND1 = 'FR'",

Fields = new ERPCollection<string> { "BUKRS", "BUTXT", "ORT01", "LAND1" }

};

DataTable dt = client.ExecuteTableQuery("T001", settings);

…

DataTable dt = client.ExecuteTableQuery("MAKT",

new ExecuteTableQuerySettings {

RowCount = 10,

WhereClause = "MATNR = '60-100C'",

OrderClause = "SPRAS DESC"

});

The first query reads all records from the SAP table T001 where the fields ORT01 equals Paris and LAND1 equals FR (France). The query returns the top 100 records and the result set contains only the fields BUKRS, BUTXT, ORT01 and LAND1.

The second query returns the top ten records of the SAP table MAKT, where the field MATNR equals the material number 60-100C. The result set is ordered by the field SPRAS.

Executing Function Modules

In addition to query SAP tables the runtime API executes SAP function modules (BAPIs). Function modules must be marked as remote-enabled modules (RFC) within SAP.

The ERPConnectServiceClient class provides a method called CreateFunction to create a structure of metadata for the function module. The method returns an instance of the data structure ERPFunction. This object instance contains all parameters types (import, export, changing and tables) that can be used with function modules.

In the sample below we call the function SD_RFC_CUSTOMER_GET and pass a name pattern (T*) for the export parameter with name NAME1. Then we call the Execute method on the ERPFunction instance. Once the method has been executed the data structure is updated. The function returns all customers in the table CUSTOMER_T.

ERPFunction function = client.CreateFunction("SD_RFC_CUSTOMER_GET");

function.Exports["NAME1"].ParamValue = "T*";

function.Execute();

foreach(ERPStructure row in function.Tables["CUSTOMER_T"])

Console.WriteLine(row["NAME1"] + ", " + row["ORT01"]);

The following code shows an additional sample. Before we can execute this function module we need to define a table with HR data as input parameter.

The parameters you need and what values the function module is returning dependents on the implementation of the function module

ERPFunction function = client.CreateFunction("BAPI_CATIMESHEETMGR_INSERT");

function.Exports["PROFILE"].ParamValue = "TEST";

function.Exports["TESTRUN"].ParamValue = "X";

ERPTable records = function.Tables["CATSRECORDS_IN"];

ERPStructure r1 = records.AddRow();

r1["EMPLOYEENUMBER"] = "100096";

r1["WORKDATE"] = "20110704";

r1["ABS_ATT_TYPE"] = "0001";

r1["CATSHOURS"] = (decimal)8.0;

r1["UNIT"] = "H";

function.Execute();

ERPTable ret = function.Tables["RETURN"];

foreach(var i in ret)

Console.WriteLine("{0} - {1}", i["TYPE"], i["MESSAGE"]);

Executing XtractQL Query Statements

The ECS runtime is offering a SAP query language called XtractQL. The XtractQL query language, also known as XQL, consists of ABAP and SQL syntax elements. XtractQL allows querying SAP tables, BW-Cubes, SAP Queries and executing function modules.

It’s possible to return metadata for the objects and MDX statements can also be executed with XQL. All XQL queries are returning a data table object as result set. In case of the execution of function modules the caller must define the returning table (see sample below – INTO @RETVAL). XQL is very useful in situations where you need to handle dynamic statements. The following list shows a

SELECT TOP 5 * FROM T001W WHERE FABKL = 'US'

This query selects the top 5 records of the SAP table T001W where the field FABKL equals the value US.

SELECT * FROM MARA WITH-OPTIONS(CUSTOMFUNCTIONNAME = 'Z_XTRACT_IS_TABLE')

SELECT MAKTX AS [ShortDesc], MANDT, SPRAS AS Language FROM MAKT

This query selects all records of the SAP table MAKT. The result set will contains three fields named ShortDesc, MANDT and Language.

EXECUTE FUNCTION 'SD_RFC_CUSTOMER_GET'

EXPORTS KUNNR='0000003340'

TABLES CUSTOMER_T INTO @RETVAL;

This query executes the SAP function module SD_RFC_CUSTOMER_GET and returns as result the table CUSTOMER_T (defined as @RETVAL).

DESCRIBE FUNCTION 'SD_RFC_CUSTOMER_GET' GET EXPORTS

This query returns metadata about the export parameters of the

SELECT TOP 30 LIPS-LFIMG, LIPS-MATNR, TEXT_LIKP_KUNNR AS CustomerID

FROM QUERY 'S|ZTHEO02|ZLIKP'

WHERE SP$00002 BT '0080011000'AND '0080011999'

This statement executes the SAP Query “S|ZTHEO02|ZLIKP” (name includes the workspace, user group and the query name). As you can see XtractQL extends the SQL syntax with ABAP or SAP specific syntax elements. This way you can define fields using the LIPS-MATNR format and SAP-like where clauses like “SP$00002 BT ‘0080011000’AND ‘0080011999’”.

ERPConnect Services provides a little helper tool, the XtractQL Explorer (see screenshot below), to learn more about the query language and to test XQL queries. You can use this tool independent of SharePoint, but you need access to a SAP system.

To find out more about all XtractQL language syntax see the product manual.

Silverlight And Desktop Applications

So far all samples are using the assembly ERPConnectServices.Server.Common.dll as project reference and all code snippets shown run within the SharePoint context, e.g. Web Part.

ERPConnect Services also provides client libraries for Silverlight and desktop applications:

ERPConnectServices.Client.dll for Desktop applications

ERPConnectServices.Client.Silverlight.dll for Silverlight applications

You need to add the references depending what project you are implementing.

In Silverlight the implementation and design pattern is a little bit more complicated, since all web services will be called in asynchronously. It’s also not possible to use the DataTable class. It’s just not implemented for Silverlight.

The runtime provides a similar class called ERPDataTable, which is used in this cases by the API. The ERPConnectServiceClient class for Silverlight provides the method ExecuteTableQueryAsync and an event called ExecuteTableQueryCompleted as callback delegate.

public event EventHandler<ExecuteTableQueryCompletedEventArgs> ExecuteTableQueryCompleted;

public void ExecuteTableQueryAsync(string tableName)

public void ExecuteTableQueryAsync(string tableName, ExecuteTableQuerySettings settings)

The following code sample shows a simple query of the SAP table T001 within a Silverlight client.

First of all, an instance of the ERPConnectServiceClient is created using the URI of the ERPConnectService.svc, then a delegate is defined to handle the complete callback. Next, the query is executed, defined with a RowCount equal 10 to only return the top 10 records in the result set.

Once the result is returned the data set will be attached to a DataGrid control (see screenshot below) within the callback method.

void OnGetTableDataButtonClick(object sender, RoutedEventArgs e)

{

ERPConnectServiceClient client = new ERPConnectServiceClient(

new Uri("http://<SERVERNAME>/_vti_bin/ERPConnectService.svc"));

client.ExecuteTableQueryCompleted += OnExecuteTableQueryCompleted;

client.ExecuteTableQueryAsync("T001",

new ExecuteTableQuerySettings { RowCount = 150 });

}

void OnExecuteTableQueryCompleted(object sender, ExecuteTableQueryCompletedEventArgs e)

{

if(e.Error != null)

MessageBox.Show(e.Error.Message);

else

{

e.Table.View.GroupDescriptions.Add(new PropertyGroupDescription("ORT01"));

TableGrid.ItemsSource = e.Table.View;

}

}

The screenshot below shows the XAML of the Silverlight page:

The final result can be seen below:

ECS Designer

ERPConnect Services includes a Visual Studio 2010 plugin, the ECS Designer, that allows developer to visually design SAP interfaces. It’s working similar to the LINQ to SAP Designer I have written about a while ago, see article at CodeProject: LINQ to SAP.

The ECS Designer is not automatically installed once you install the product. You need to call the installation program manually. The setup adds a new project item type to Visual Studio 2010 with the file extension .ecs and is linking it with the designer. The needed references are added automatically after adding an ECS project item.

The designer generates source code to integrate with the ERPConnect Services runtime after the project item is saved. The generated context class contains methods and sub-classes that represent the defined SAP objects (see screenshots below).

Before you access the SAP system for the first time you will be asked to enter the connection data. You may also load the connection data from SharePoint system. The designer GUI is shown in the screenshots below:

The screenshot above for instance shows the tables dialog. After clicking the Add (+) button in the main designer screen and searching a SAP table in the search dialog, the designer opens the tables dialog.

In this dialog you can change the name of the generated class, the class modifier and all needed properties (fields) the final class should contain.

To preview your selection press the Preview button. The next screenshot shows the automatically generated classes in the file named EC1.Designer.cs:

Using the generated code is simple. The project type we are using for this sample is a standard console application, therefore the designer is referencing the ERPConnectServices.Client.dll for desktop applications.

Since we are not within the SharePoint context, we have to define the URI of the SharePoint system by passing this value into the constructor of the ERPConnectServicesContext class.

The designer has generated class MAKT and an access property MAKTList for the context class of the table MAKT. The type of this property MAKTList is ERPTableQuery<MAKT>, which is a LINQ queryable data type.

This means you can use LINQ statements to define the underlying query. Internally, the ERPTableQuery<T> type will translate your LINQ query into call of ExecuteTableQuery.

That’s it!

Advanced Techniques

There are situations when you have to use the exact same SAP connection while calling a series of function modules in order to receive the correct result. Let’s take the following code:

ERPConnectServiceClient client = new ERPConnectServiceClient();

using(client.BeginConnectionScope())

{

ERPFunction f = client.CreateFunction("BAPI_GOODSMVT_CREATE");

ERPStructure s = f.Exports["GOODSMVT_HEADER"].ToStructure();

s["PSTNG_DATE"] = "20110609"; s["PR_UNAME"] = "BAEURLE"; s["HEADER_TXT"] = "XXX"; s["DOC_DATE"] = "20110609";

f.Exports["GOODSMVT_CODE"].ToStructure()["GM_CODE"] = "01";

ERPStructure r = f.Tables["GOODSMVT_ITEM"].AddRow();

r["PLANT"] = "1000"; r["PO_NUMBER"] = "4500017210"; r["PO_ITEM"] = "010"; r["ENTRY_QNT"] = 1; r["MOVE_TYPE"] = "101"; r["MVT_IND"] = "B"; r["STGE_LOC"] = "0001";

f.Execute();

string matDocument = f.Imports["MATERIALDOCUMENT"].ParamValue as string;

string matDocumentYear = f.Imports["MATDOCUMENTYEAR"].ParamValue as string;

ERPTable ret = f.Tables["RETURN"];

foreach(var i in ret)

Console.WriteLine("{0} - {1}", i["TYPE"], i["MESSAGE"]);

ERPFunction fCommit = client.CreateFunction("BAPI_TRANSACTION_COMMIT");

fCommit.Exports["WAIT"].ParamValue = "X";

fCommit.Execute();

}

In this sample we create a goods receipt for a goods movement with BAPI_GOODSMVT_CREATE. The final call to BAPI_TRANSACTION_COMMIT will only work, if the system under the hood is using the same connection object.

The runtime is not providing direct access to the underlying SAP connection, but the library offers a mechanism called connection scoping. You may create a new connection scope with the client library and telling ECS to use the same SAP connection until you close the connection scope. Within the connection scope every library call will use the same SAP connection.

In order to create a new connection scope you need to call the BeginConnectionScope method of the class ERPConnectServiceClient.

The method returns an IDisposable object, which can be used in conjunction with the using statement of C# to end the connection scope.

Alternatively, you may call the EndConnectionScope method. It’s also possible to use function modules with nested structures as parameters.

This is a special construct of SAP. The goods receipt sample above is using a nested structure for the export parameter GOODSMVT_CODE. For more detailed information about nested structures and tables see the product documentation.

![ppt_img[1]](https://sharepointsamurai.files.wordpress.com/2014/10/ppt_img1.gif?w=300&h=225)

![sap_integration_en_round[1]](https://sharepointsamurai.files.wordpress.com/2014/09/sap_integration_en_round1.jpg?w=300&h=155)

Note

Note

![Site-Definition-03.png_2D00_700x0[1]](https://sharepointsamurai.files.wordpress.com/2014/08/site-definition-03_2d00_700x01.png?w=474)

Note

Note Caution

Caution![8322.sharepoint_2D00_2010_5F00_4855E582[1]](https://sharepointsamurai.files.wordpress.com/2014/07/8322-sharepoint_2d00_2010_5f00_4855e5821.jpg?w=474&h=261)

Tip:

Tip:  Note:

Note:

![activity diagram uml+activity+diagram+library+mgmt+book+return[1]](https://sharepointsamurai.files.wordpress.com/2014/06/umlactivitydiagramlibrarymgmtbookreturn1-e1403235154656.jpg?w=474)

![ImageGen[1]](https://sharepointsamurai.files.wordpress.com/2014/06/imagegen1.png?w=474)

+

+ ![microsoft_crm_wallpaper_02[1]](https://sharepointsamurai.files.wordpress.com/2014/06/microsoft_crm_wallpaper_021.jpg?w=167&h=125)

![ImageGen[1]](https://sharepointsamurai.files.wordpress.com/2014/06/imagegen1.png?w=168&h=174)

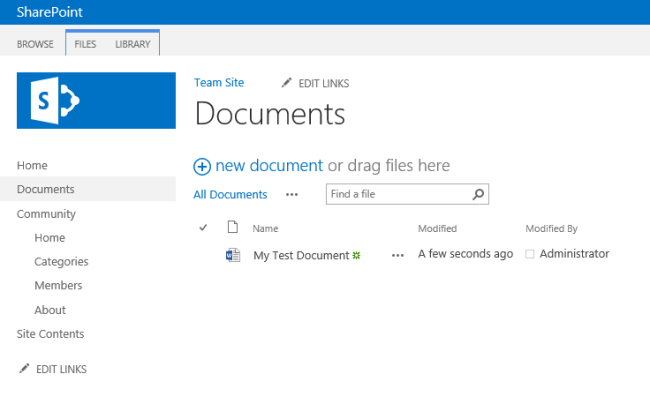

One of the core concepts of Business Connectivity Services (BCS) for

One of the core concepts of Business Connectivity Services (BCS) for

![sap2[1]](https://sharepointsamurai.files.wordpress.com/2014/05/sap21.jpg?w=300&h=148)

![designmanager[1]](https://sharepointsamurai.files.wordpress.com/2014/03/designmanager1.png?w=300&h=169)

![4336.SP2013SearchArchitecture[1]](https://sharepointsamurai.files.wordpress.com/2014/03/4336-sp2013searcharchitecture1.png?w=300&h=168)

.

.