This section contains a set of four Visual How Tos that show how to develop a real provider for the Microsoft Outlook Social Connector (OSC) by using the OSC Provider Proxy Library.

![Outlook.com_[1]](https://sharepointsamurai.files.wordpress.com/2014/04/outlook-com_1.jpg?w=300&h=189)

An OSC provider allows Outlook users to view, in the People Pane, an aggregation of social information updates from professional and social network sites. An OSC provider is a Component Object Model (COM) DLL. The OSC provider extensibility interfaces form the medium through which the OSC and an OSC provider communicate. OSC provider extensibility consists of a set of interfaces that is available as an open platform. These interfaces allow the OSC to access social network data in a way that is independent of the APIs of each social network. An OSC provider obtains social network data from the corresponding social network and, by implementing the extensibility interfaces, feeds that data to the OSC.

The OSC Provider Proxy Library simplifies the implementation of the OSC provider extensibility interfaces. Instead of a provider explicitly implementing these interfaces, the proxy library implements them, and those implementations call a set of abstract and virtual methods that the proxy library defines.

A provider, in turn, overrides this set of abstract and virtual methods with the business logic specific to the social network, to return social network data that the OSC requires.

To show how a provider can use the OSC Provider Proxy Library, this set of Visual How Tos describes a real provider for OfficeTalk. OfficeTalk is a social network in a private corporate environment and is not publicly available.

Nonetheless, it is a good example of the kind of social network that you might want to develop a custom OSC provider for. You can use the procedures for creating the OSC provider for OfficeTalk to create a custom OSC provider for any social network.

Developing a Real Outlook Social Connector Provider by Using a Proxy Library

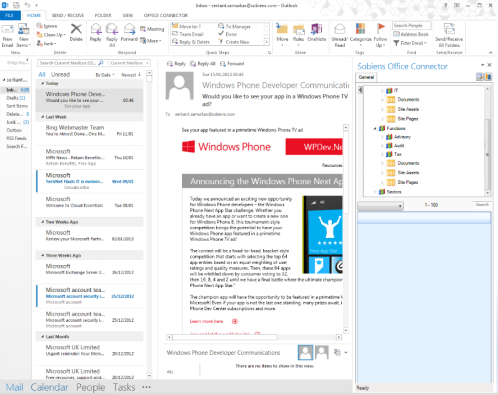

The Microsoft Outlook Social Connector (OSC) provides a communication hub for personal and professional communications. Just by selecting an Outlook item such as an email or meeting request and clicking the sender or a recipient of that item, users can see, in the People Pane, activities, photos, and status updates for the person on their favorite social networks.

The OSC obtains social network data by calling an OSC provider, which behaves like a translation layer between Outlook and the social network. The OSC provider model is open, and you can develop a custom OSC provider by implementing the required OSC provider extensibility interfaces. To retrieve social network data, the OSC makes calls to the OSC provider through these interface members. The OSC provider communicates with the social network and returns the social network data to the OSC as a string or as XML that conforms to the Outlook Social Connector XML schema. Figure 1 shows the various components of the sample OfficeTalk OSC provider reviewed in this Visual How To.

Figure 1. Relationships of the sample OfficeTalk OSC provider with related components

This Visual How To shows the procedures to create a custom OSC provider for OfficeTalk. OfficeTalk is not publicly available and is being used as an example of the kind of social network you might want to develop a custom OSC provider for. You can use the procedures for creating the OSC provider for OfficeTalk to create a custom OSC provider for any social network.

The OfficeTalk provider uses the Outlook Social Connector Provider Proxy Library to simplify the implementation of the OSC provider extensibility interfaces. The OSC Provider Proxy Library implements all of the OSC provider extensibility interface members. These interface members, in turn, call a consolidated set of abstract and virtual methods that provide the social network data that the OSC requires. To create a custom OSC provider that uses the OSC Provider Proxy Library, a developer overrides these abstract and virtual methods with the business logic to communicate with the social network.
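To make this division of labor concrete, here is a minimal sketch of the pattern in plain C#. Every type in it (ISocialProviderSketch, ProxyProviderBase, SampleProvider) is hypothetical and only stands in for the real OSC interfaces and the proxy library's OSCProvider class, which have many more members. The base class implements the interface once and forwards each call to an abstract member that the network-specific subclass overrides.

```csharp
using System;

// Hypothetical stand-in for an OSC extensibility interface (not the real ISocialProvider).
public interface ISocialProviderSketch
{
    string GetNetworkName();
    string GetLoggedOnUserName();
}

// Hypothetical stand-in for the proxy library's base class: it implements the
// interface members once and delegates the network-specific work to abstract members.
public abstract class ProxyProviderBase : ISocialProviderSketch
{
    // Supplied by the network-specific subclass.
    protected abstract string NetworkName { get; }
    protected abstract string LookUpCurrentUser();

    // Interface members implemented by the base class, not by the provider author.
    public string GetNetworkName() => NetworkName;

    public string GetLoggedOnUserName()
    {
        string user = LookUpCurrentUser();
        // Shared validation lives in the base class, not in every provider.
        if (string.IsNullOrEmpty(user))
            throw new InvalidOperationException("The provider returned no user name.");
        return user;
    }
}

// Hypothetical network-specific provider: only overrides, no interface plumbing.
public sealed class SampleProvider : ProxyProviderBase
{
    protected override string NetworkName => "OfficeTalk";
    protected override string LookUpCurrentUser() => "alice";
}
```

A caller that holds only the interface, for example `ISocialProviderSketch p = new SampleProvider();`, never sees the subclass's overrides directly, which mirrors how the OSC talks only to the extensibility interfaces.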

The sample solution for this article includes all of the code for a custom OSC provider for OfficeTalk. However, this Visual How To does not show all of the code in the sample solution. Instead, it focuses on creating a custom OSC provider by using the OSC Provider Proxy Library.

The sample solution contains two projects:

- OSCProvider—This project is an unmodified version of the OSC Provider Proxy Library that is used to simplify the creation of the OfficeTalk OSC provider.

- OfficeTalkOSCProvider—This project includes the source code files that are specific to the OfficeTalk OSC provider.

The OfficeTalkOSCProvider project includes the following source code files:

- OfficeTalkHelper—This class contains helper methods that are used throughout the sample solution.

- OTProvider—This is a partial class that contains the OSC Provider Proxy Library override methods that return information about the OSC provider, information about the social network, and information for the current user.

- OTProvider_Activities—This is a partial class that contains the OSC Provider Proxy Library override methods that return activity information.

- OTProvider_Friends—This is a partial class that contains the OSC Provider Proxy Library override methods that return friends information.
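OTProvider, OTProvider_Activities, and OTProvider_Friends all contribute members to one class because the class is declared partial; the compiler merges the parts. The following sketch uses a hypothetical ProviderSketch class instead of the real OTProvider and shows in one listing what would normally be three files; the `// File:` comments mark the intended split. Attributes such as ComVisible and the base class only need to be declared on one part.

```csharp
using System.Collections.Generic;

// File: ProviderSketch.cs (core members)
public partial class ProviderSketch
{
    internal const string NetworkName = "OfficeTalk";

    // Uses members that are defined in the other two parts.
    public string Describe() =>
        NetworkName + ": " + FriendCount() + " friends, " + ActivityCount() + " activities";
}

// File: ProviderSketch_Friends.cs (friends members)
public partial class ProviderSketch
{
    private readonly List<string> friends = new List<string> { "alice", "bob" };
    public int FriendCount() => friends.Count;
}

// File: ProviderSketch_Activities.cs (activity members)
public partial class ProviderSketch
{
    private readonly List<string> activities = new List<string> { "Posted a status update" };
    public int ActivityCount() => activities.Count;
}
```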

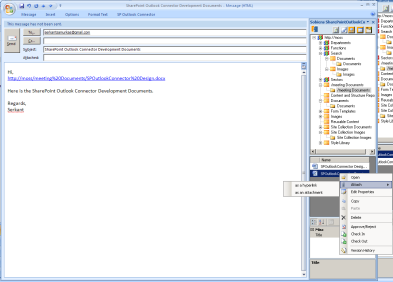

Creating the OfficeTalk OSC Provider Solution

The following sections show how to create the OfficeTalk OSC provider sample solution and how to add OSC Provider Proxy Library override methods that return information about the OSC provider, the social network, and the current user.

You must create the OSC provider as a class library project. For this Visual How To, the solution is named OfficeTalkOSCProvider.

Adding the OSC Provider Proxy Library Project

You must download the Outlook Social Connector Provider Proxy Library from MSDN Code Gallery, and then extract it to the local computer.

To add the OSC Provider Proxy Library to the OfficeTalkOSCProvider solution

- Copy the OSCProvider project to the OfficeTalkOSCProvider directory.

- On the File menu in Visual Studio 2010, point to Add, and then click Existing Project.

- Select the OSCProvider.csproj project file that you copied in the first step.

Adding References

Add the following references to the OfficeTalkOSCProvider project:

- Outlook Social Provider COM component. The name in the COM tab is Microsoft Outlook Social Provider Extensibility. If there are multiple versions, select TypeLib Version 1.1.

- System.Drawing

Adding Social Network Specific References and Files

Add other appropriate references and files for the social network. The sample solution does not include the OfficeTalk API assembly. To support the social network for which you are developing an OSC provider, replace the OfficeTalk API references and files with the references and files that are specific to your social network.

The sample solution for OfficeTalk contains the following references and files:

- The OfficeTalk API assembly.

- The OfficeTalk icon file.

Creating a Subclass of the OSC Provider Proxy Library OSCProvider

Use the OSC Provider Proxy Library to create a subclass of the OSCProvider class, OTProvider, which represents the sample OSC provider. Add a class named OTProvider to the OfficeTalkOSCProvider project. OTProvider is defined as a partial class so that logic for OSC provider core methods, friends, and activities can be defined in separate source code files.

Replace the class definition with the code in the following section. The code example starts with the using statements for the OSC Provider Proxy Library and OfficeTalk API. The OTProvider partial class then inherits from the OSCProvider class. Note that the OTProvider class has the ComVisible attribute so that the Outlook Social Connector can call it.

```csharp
using System;
using System.Globalization;
using System.Collections.Generic;
using System.IO;
using System.Reflection;
using System.Drawing;
using System.Drawing.Imaging;

// Using statements for the OSC Provider Proxy Library.
using OSCProvider;
using OSCProvider.Schema;

// Using statements for the social network.
using OfficeTalkAPI;

namespace OfficeTalkOSCProvider
{
    // SubClass of the OSC Provider Proxy Library OSCProvider
    // used to create a custom OSC provider.
    [System.Runtime.InteropServices.ComVisible(true)]
    public partial class OTProvider : OSCProvider.OSCProvider
    {
        ...
```

After the OTProvider class is defined, add the following code for constants used throughout the OfficeTalkOSCProvider solution.

```csharp
// Constants for the OfficeTalk OSC provider.
internal static string NETWORK_NAME = @"OfficeTalk";
internal static string NETWORK_GUID = @"YourNetworkGuid";
internal static string API_VERSION = @"YourApiVersion";
internal static string API_URL = @"YourApiUrl";
internal static OSCProvider.ProviderSchemaVersion SCHEMA_VERSION =
    ProviderSchemaVersion.v1_1;
```
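The NETWORK_GUID value is later passed to `new Guid(...)` in the GetProviderData override, which throws a FormatException while the constant still holds placeholder text. A minimal sketch, using a hypothetical ProviderConstantsCheck helper, of generating a GUID once during development (then pasting it into NETWORK_GUID and never changing it) and of checking the constant before the provider is registered:

```csharp
using System;

public static class ProviderConstantsCheck
{
    // Run once during development and copy the result into NETWORK_GUID.
    // Do not regenerate it for later versions: the GUID identifies the network.
    public static string NewNetworkGuid() =>
        Guid.NewGuid().ToString("B").ToUpperInvariant();

    // True only if the value parses as a GUID in one of the standard formats.
    public static bool IsValidNetworkGuid(string value) =>
        Guid.TryParse(value, out _);
}
```

For example, `ProviderConstantsCheck.IsValidNetworkGuid("YourNetworkGuid")` returns false, which is a cheap way to catch an unreplaced placeholder in a unit test.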

Allowing for Debugging

To debug the OfficeTalkOSCProvider, you must configure the OfficeTalkOSCProvider project to start Outlook, and you must register the OfficeTalkOSCProvider as an Outlook Social Connector provider.

To set up the OfficeTalkOSCProvider project for debugging

- Right-click the OfficeTalkOSCProvider project, and then click Properties.

- Select the Debug tab.

- Under Start Action, select Start External Program.

- Specify the full path to the version of Outlook that is installed on your computer. The default path for 32-bit Outlook on 32-bit Windows is C:\Program Files\Microsoft Office\Office14\OUTLOOK.EXE.

The Outlook Social Connector will not call the OfficeTalkOSCProvider until it is registered as an OSC provider. The sample solution includes a file named RegisterProvider.reg that updates the registry with the entries that are required to register the OfficeTalkOSCProvider as an OSC provider. You can update the registry by opening the RegisterProvider.reg file in Windows Explorer.

The RegisterProvider.reg file assumes that the sample solution is located in the C:\temp directory. If the sample solution is located in a different directory, update the CodeBase entry in the RegisterProvider.reg file to point to the correct location.

Adding Helper Methods

The OfficeTalkHelper class contains helper methods, including the GetOfficeTalkClient and ConvertUserToPerson methods, that are used throughout the sample solution.

The following GetOfficeTalkClient method returns an OfficeTalkClient object that is used to communicate with OfficeTalk. If the OfficeTalkClient has not been initialized, GetOfficeTalkClient creates and configures a new OfficeTalkClient by using the API_URL and API_VERSION constants that are defined in OTProvider.

```csharp
// Returns a reference to the OfficeTalk client.
private static OfficeTalkClient officeTalkClient = null;

internal static OfficeTalkClient GetOfficeTalkClient()
{
    // Create and configure the client only on the first call.
    if (officeTalkClient == null)
    {
        officeTalkClient =
            new OfficeTalkClient(OTProvider.API_URL);
        officeTalkClient.UserAgent =
            @"OfficeTalkOSC/" + OTProvider.API_VERSION;
    }
    return officeTalkClient;
}
```
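The null check in GetOfficeTalkClient is not thread-safe: two threads can both see null and each create a client. If the provider can be called from more than one thread (an assumption; the article does not say), Lazy<T> is one standard alternative. In this sketch, ApiClient is a hypothetical stand-in for OfficeTalkClient, and the two constants are placeholders for API_URL and API_VERSION.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for the OfficeTalk client.
public sealed class ApiClient
{
    public ApiClient(string baseUrl) { BaseUrl = baseUrl; }
    public string BaseUrl { get; }
    public string UserAgent { get; set; }
}

public static class ClientFactory
{
    // Placeholder values; the sample reads these from OTProvider.
    private const string ApiUrl = "https://example.invalid/officetalk";
    private const string ApiVersion = "1.0";

    // ExecutionAndPublication runs the factory at most once,
    // even when several threads request the client at the same time.
    private static readonly Lazy<ApiClient> client = new Lazy<ApiClient>(
        () => new ApiClient(ApiUrl) { UserAgent = "OfficeTalkOSC/" + ApiVersion },
        LazyThreadSafetyMode.ExecutionAndPublication);

    public static ApiClient GetClient() => client.Value;
}
```

The design keeps the "create on first use" behavior of the original helper while moving the locking concern into the framework.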

The ConvertUserToPerson method converts an OfficeTalk User object to an OSC Provider Proxy Library Person object that is usable within the OSC Provider Proxy Library. The ConvertUserToPerson method creates a new OSC Provider Proxy Library Person and then maps the User properties to the related Person properties.

```csharp
// Converts an OfficeTalk User to an OSC Provider Proxy Library Person.
internal static Person ConvertUserToPerson(OfficeTalkAPI.OTUser user)
{
    // Create the OSC Provider Proxy Library Person.
    Person person = new Person();

    // Map the User properties to the Person properties.
    person.FullName = user.name;
    person.Email = user.email;
    person.Company = user.department;
    person.UserID = user.id.ToString(CultureInfo.InvariantCulture);
    person.Title = user.title;
    person.CreationTime = user.created_atAsDateTime;

    // FriendStatus is based on whether the user is being followed
    // by the currently logged-on user.
    person.FriendStatus =
        user.following ? FriendStatus.friend : FriendStatus.notfriend;

    // Set the PictureUrl if a profile picture is loaded in OfficeTalk.
    if (user.image_url != null)
    {
        person.PictureUrl = new Uri(OTProvider.API_URL + user.image_url);
    }

    // WebProfilePage is set to the user's home page in OfficeTalk.
    person.WebProfilePage =
        OTProvider.API_URL + @"/Home/index/" + user.alias + "#User";

    return person;
}
```

Overriding the GetProviderData Method

The OSC ISocialProvider interface contains members that return information about the OSC provider. This includes the capabilities of the social network, how to communicate with the social network, and general information about the social network. The OSC Provider Proxy Library provides the GetProviderData abstract method, which you can override to return OSC provider information. The GetProviderData abstract method returns the OSC Provider Proxy Library ProviderData object, which encapsulates the provider information.

The following section of the GetProviderData override method initializes a ProviderData object and sets the properties for the OfficeTalk provider.

```csharp
// The ProviderData contains information about the social network and is
// used by the OSC ISocialProvider members to return information.
ProviderData providerData = new ProviderData();

// Friendly name of the social network to display in Outlook.
providerData.NetworkName = NETWORK_NAME;

// GUID that represents the social network.
// This GUID should not change between versions.
providerData.NetworkGuid = new Guid(NETWORK_GUID);

// Version of the social network provider.
providerData.Version = API_VERSION;

// Array of URLs that the social network provider uses.
// The default URL should be the first item in the array.
providerData.Urls = new string[] { API_URL };

// The icon of the social network to display in Outlook.
Byte[] icon = null;
Assembly assembly = Assembly.GetExecutingAssembly();
using (Stream imageStream =
    assembly.GetManifestResourceStream("OfficeTalkOSCProvider.OTIcon16.bmp"))
{
    using (MemoryStream memoryStream = new MemoryStream())
    {
        using (Image socialNetworkIcon = Image.FromStream(imageStream))
        {
            socialNetworkIcon.Save(memoryStream, ImageFormat.Bmp);
            icon = memoryStream.ToArray();
        }
    }
}
providerData.Icon = icon;
```
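GetManifestResourceStream returns null, rather than throwing, when the resource name does not match, and Image.FromStream then fails with a less helpful error. Embedded resource names are normally the project's default namespace followed by any folder names and the file name, which is where "OfficeTalkOSCProvider.OTIcon16.bmp" comes from, and the icon file's Build Action must be set to Embedded Resource. A minimal sketch of a hypothetical ResourceHelper that fails with a message listing the resource names that do exist:

```csharp
using System;
using System.IO;
using System.Reflection;

public static class ResourceHelper
{
    // Reads an embedded resource into a byte array, or throws with the
    // list of available resource names if the requested one is missing.
    public static byte[] ReadEmbeddedResource(Assembly assembly, string resourceName)
    {
        using (Stream stream = assembly.GetManifestResourceStream(resourceName))
        {
            if (stream == null)
            {
                string available = string.Join(", ", assembly.GetManifestResourceNames());
                throw new FileNotFoundException(
                    "Embedded resource '" + resourceName + "' was not found. Available: " + available);
            }

            using (MemoryStream buffer = new MemoryStream())
            {
                stream.CopyTo(buffer);
                return buffer.ToArray();
            }
        }
    }
}
```

The helper only returns raw bytes; in GetProviderData they could be wrapped in a MemoryStream and passed to Image.FromStream as before.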

The following section of the GetProviderData override method uses the OSC Provider Proxy Library Capabilities class to identify the capabilities and requirements of the OfficeTalk OSC provider. The Capabilities class defines capabilities through its CapabilityFlags property. The CapabilityFlags property is a bitmask that is set by using the bitwise OR operator to combine the constants that the OSC Provider Proxy Library defines for each capability.

```csharp
// Define the capabilities for the provider.
// The Capabilities object will generate the appropriate XML string.
Capabilities capabilities = new Capabilities(SCHEMA_VERSION);
capabilities.CapabilityFlags =
    // OSC should call the GetAutoConfiguredSession method to get a
    // configured session for the user.
    Capabilities.CAP_SUPPORTSAUTOCONFIGURE |

    // OSC should hide all links and the Remember my password check box
    // in the account configuration dialog box.
    Capabilities.CAP_HIDEHYPERLINKS |
    Capabilities.CAP_HIDEREMEMBERMYPASSWORD |

    // The following activity settings identify that Activities uses
    // hybrid synchronization.
    // OSC will store activities for friends in a hidden folder and
    // activities for non-friends in memory.
    Capabilities.CAP_GETACTIVITIES |
    Capabilities.CAP_DYNAMICACTIVITIESLOOKUP |
    Capabilities.CAP_DYNAMICACTIVITIESLOOKUPEX |
    Capabilities.CAP_CACHEACTIVITIES |

    // The following Friends settings identify that friend information
    // uses hybrid synchronization.
    // OSC will call the GetPeopleDetails method every time the People Pane
    // is refreshed to ensure the latest user information is displayed.
    Capabilities.CAP_GETFRIENDS |
    Capabilities.CAP_DYNAMICCONTACTSLOOKUP |
    Capabilities.CAP_CACHEFRIENDS |

    // The following Friends settings identify that OfficeTalk supports
    // the FollowPerson and UnFollowPerson calls.
    Capabilities.CAP_DONOTFOLLOWPERSON |
    Capabilities.CAP_FOLLOWPERSON;

// Set the email HashFunction.
// Setting the EmailHashFunction is required if CAP_DYNAMICCONTACTSLOOKUP
// or CAP_DYNAMICACTIVITIESLOOKUPEX is set.
capabilities.EmailHashFunction = HashFunction.SHA1;

// Set the capabilities property on the providerData object.
providerData.ProviderCapabilities = capabilities;
```

The capabilities and requirements defined in the preceding code example are specific to OfficeTalk. A custom OSC provider that is developed for a different social network must define a set of capabilities and requirements that are specific to that social network.
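Because the capability constants are bit flags, they are combined with `|` and tested with `&`. The sketch below uses a hypothetical [Flags] enum whose names mirror a few of the proxy library's constants; the numeric values are illustrative only and are not the library's real values. It also encodes the rule from the code comments in GetProviderData: an email hash function is required whenever CAP_DYNAMICCONTACTSLOOKUP or CAP_DYNAMICACTIVITIESLOOKUPEX is set.

```csharp
using System;

// Hypothetical flag values for illustration; not the proxy library's actual constants.
[Flags]
public enum CapabilitySketch
{
    None = 0,
    GetFriends = 1 << 0,
    CacheFriends = 1 << 1,
    DynamicContactsLookup = 1 << 2,
    GetActivities = 1 << 3,
    CacheActivities = 1 << 4,
    DynamicActivitiesLookupEx = 1 << 5,
}

// Hypothetical stand-in for the proxy library's HashFunction setting.
public enum HashSketch { None, SHA1 }

public static class CapabilityCheck
{
    // True if every bit of flag is present in flags.
    public static bool Has(CapabilitySketch flags, CapabilitySketch flag) =>
        (flags & flag) == flag;

    // An email hash function is required when either dynamic lookup flag is set.
    public static bool IsConsistent(CapabilitySketch flags, HashSketch emailHash)
    {
        bool needsHash = Has(flags, CapabilitySketch.DynamicContactsLookup)
                      || Has(flags, CapabilitySketch.DynamicActivitiesLookupEx);
        return !needsHash || emailHash != HashSketch.None;
    }
}
```

A check like IsConsistent could run in a unit test against a provider's chosen flags, so that a missing EmailHashFunction is caught before the OSC loads the provider.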

The following list shows the CapabilityFlags constants that are available in the OSC Provider Proxy Library Capabilities class.

- CAP_SUPPORTSAUTOCONFIGURE
  - The provider supports calling the ISocialProvider.GetAutoConfiguredSession method to attempt automatic configuration of the network for the user.

- CAP_GETFRIENDS
  - The provider supports the ISocialPerson.GetFriendsAndColleagues or ISocialSession2.GetPeopleDetails method. The OSC uses the CAP_CACHEFRIENDS and CAP_DYNAMICCONTACTSLOOKUP settings to determine whether friends are stored as Outlook contact items or are stored in memory.

- CAP_CACHEFRIENDS
  - The provider supports storing friends as Outlook contact items in a social-network-specific contacts folder.

- CAP_DYNAMICCONTACTSLOOKUP
  - The provider supports the ISocialSession2.GetPeopleDetails method for on-demand synchronization of friends and non-friends. If CAP_DYNAMICCONTACTSLOOKUP is set, the OSC calls the ISocialSession2.GetPeopleDetails method every time the People Pane is refreshed.

- CAP_SHOWONDEMANDCONTACTSWHENMINIMIZED
  - Indicates that the OSC should carry out on-demand synchronization for friends and non-friends when the People Pane is minimized.

- CAP_FOLLOWPERSON
  - The provider supports the ISocialSession.FollowPerson method for adding the person as a friend on the social network.

- CAP_DONOTFOLLOWPERSON
  - The provider supports the ISocialSession.UnFollowPerson method for removing the person as a friend on the social network.

- CAP_GETACTIVITIES
  - The provider supports the ISocialPerson.GetActivities or ISocialSession2.GetActivitiesEx method. The OSC uses the CAP_CACHEACTIVITIES and CAP_DYNAMICACTIVITIESLOOKUPEX settings to determine whether activities are stored as Outlook RSS items or are stored in memory.

- CAP_CACHEACTIVITIES
  - The provider supports storing activities as Outlook RSS items in a hidden News Feed folder. To support cached synchronization of activities, CAP_CACHEACTIVITIES should be set and CAP_DYNAMICACTIVITIESLOOKUPEX should not be set. With cached synchronization of activities, the OSC stores all activities as Outlook RSS items in a hidden News Feed folder. To support hybrid synchronization of activities, both CAP_CACHEACTIVITIES and CAP_DYNAMICACTIVITIESLOOKUPEX should be set. With hybrid synchronization of activities, the OSC stores activities for friends as Outlook RSS items in a hidden News Feed folder and caches activities for non-friends in memory. To support on-demand synchronization of activities, CAP_CACHEACTIVITIES should not be set and CAP_DYNAMICACTIVITIESLOOKUPEX should be set. With on-demand synchronization of activities, the OSC caches all activities in memory.

- CAP_DYNAMICACTIVITIESLOOKUP
  - Deprecated in OSC 1.1. Use the CAP_DYNAMICACTIVITIESLOOKUPEX setting instead.

- CAP_DYNAMICACTIVITIESLOOKUPEX
  - The provider supports the ISocialSession2.GetActivitiesEx method for on-demand or hybrid synchronization of activities. To support on-demand synchronization of activities, CAP_DYNAMICACTIVITIESLOOKUPEX should be set and CAP_CACHEACTIVITIES should not be set. With on-demand synchronization of activities, the OSC calls ISocialSession2.GetActivitiesEx every time the People Pane is refreshed. To support hybrid synchronization of activities, both CAP_DYNAMICACTIVITIESLOOKUPEX and CAP_CACHEACTIVITIES should be set. With hybrid synchronization of activities, the OSC calls ISocialSession2.GetActivitiesEx every 30 minutes to refresh activities information. When CAP_DYNAMICACTIVITIESLOOKUPEX is not set, the OSC does not call ISocialSession2.GetActivitiesEx.

- CAP_SHOWONDEMANDACTIVITIESWHENMINIMIZED
  - Indicates that the OSC should carry out on-demand synchronization for activities when the People Pane is minimized.

- CAP_DISPLAYURL
  - Indicates that the OSC should display the network URL in the account configuration dialog box.

- CAP_HIDEHYPERLINKS
  - Indicates that the OSC should hide the “Click here to create an account” and the “Forgot your password?” hyperlinks in the account configuration dialog box.

- CAP_HIDEREMEMBERMYPASSWORD
  - Indicates that the OSC should hide the Remember my password check box in the account configuration dialog box.

- CAP_USELOGONWEBAUTH
  - Indicates that the OSC should use forms-based authentication. When CAP_USELOGONWEBAUTH is set, the OSC uses forms-based authentication and calls the ISocialSession.LogonWeb method. When CAP_USELOGONWEBAUTH is not set, the OSC uses basic authentication and calls the ISocialSession.Logon method.

- CAP_USELOGONCACHED
  - The provider supports the ISocialSession2.LogonCached method to log on with cached credentials. When CAP_USELOGONCACHED is set, the OSC ignores the CAP_USELOGONWEBAUTH setting and calls ISocialSession2.LogonCached for authentication.
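The rules in this list reduce to two small decision tables: the activity synchronization mode follows from CAP_CACHEACTIVITIES and CAP_DYNAMICACTIVITIESLOOKUPEX, and the logon method follows from CAP_USELOGONCACHED and CAP_USELOGONWEBAUTH. The sketch below encodes those tables with hypothetical enums and method names; it models the behavior described above and is not OSC code.

```csharp
// Hypothetical names that model the rules described in the list above.
public enum ActivitySync { NotDescribed, Cached, Hybrid, OnDemand }
public enum LogonMethod { Logon, LogonWeb, LogonCached }

public static class OscBehaviorSketch
{
    // Activity synchronization implied by CAP_CACHEACTIVITIES and CAP_DYNAMICACTIVITIESLOOKUPEX.
    public static ActivitySync ActivityMode(bool cacheActivities, bool dynamicLookupEx)
    {
        if (cacheActivities && dynamicLookupEx) return ActivitySync.Hybrid;   // friends cached, others in memory
        if (cacheActivities) return ActivitySync.Cached;                      // all activities as RSS items
        if (dynamicLookupEx) return ActivitySync.OnDemand;                    // all activities in memory
        return ActivitySync.NotDescribed;                                     // neither flag: not covered above
    }

    // CAP_USELOGONCACHED takes precedence over CAP_USELOGONWEBAUTH.
    public static LogonMethod LogonMode(bool useLogonCached, bool useLogonWebAuth)
    {
        if (useLogonCached) return LogonMethod.LogonCached;
        return useLogonWebAuth ? LogonMethod.LogonWeb : LogonMethod.Logon;
    }
}
```

With the OfficeTalk settings (both activity flags set, neither logon flag set), ActivityMode returns Hybrid, matching the comment in the GetProviderData code, and LogonMode returns Logon, that is, basic authentication.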

Overriding the GetMe Method

Many of the OSC interface members and OSC Provider Proxy Library override methods require information about the current user. The OSC Provider Proxy Library provides the GetMe abstract method, which you can override to return information about the current user from the social network. The GetMe abstract method returns a Person object, which contains all social network data for the current user.

The GetMe override method shown in the following example gets an OfficeTalkClient object to communicate with OfficeTalk. The GetMe override method then calls the OfficeTalk GetUser method by using the user name that is used to log on to Windows. After obtaining the OfficeTalk User, the GetMe override method calls the OfficeTalkHelper ConvertUserToPerson method to convert the OfficeTalk User to a Person that can be used within the OSC Provider Proxy Library.

After the conversion is complete, the GetMe override method sets the Person.UserName property, which supports the ISocialSession.LoggedOnUserName interface member. The Person.UserName property is set only by the GetMe override method, when it returns information about the current user.

```csharp
// OSC Proxy Library override method used to return information
// for the current user.
public override Person GetMe()
{
    // Get a reference to the OfficeTalk client.
    OfficeTalkClient officeTalkClient =
        OfficeTalkHelper.GetOfficeTalkClient();

    // Look up the user based on credentials used to log on to Windows.
    OTUser user =
        officeTalkClient.GetUser(System.Environment.UserName, Format.JSON);

    // Convert the OfficeTalk User to an OSC Provider Proxy Library Person.
    Person p = OfficeTalkHelper.ConvertUserToPerson(user);

    // Set the UserName property.
    // This is used only by the Person that the GetMe method returns to
    // support the OSC ISocialSession.LoggedOnUserName property.
    p.UserName = System.Environment.UserName;

    return p;
}
```

Overriding OSC Provider Proxy Library Friends Methods

A custom OSC provider that uses the OSC Provider Proxy Library must override the abstract and virtual methods for returning friends social network data. In the sample solution, the overrides for these OTProvider methods are located in the OTProvider_Friends source file.

The abstract and virtual methods for friends are as follows:

- GetPeopleDetails—Returns detailed user information for the email addresses that are passed into the method.

- GetFriends—Returns a list of friends for the current user.

- FollowPersonEx—Adds the person who is identified by the email address as a friend on the social network.

- UnFollowPerson—Removes the person who is identified by the user ID as a friend on the social network.
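These methods identify people by email address, and the sample sets EmailHashFunction to HashFunction.SHA1. The article does not show how the OSC applies that hash, so the canonical form in this sketch (trimmed, lower-cased, UTF-8 encoded, lowercase hexadecimal output) is an assumption; the point is only that both sides must hash the same canonical form before comparing. EmailHashSketch is a hypothetical name.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class EmailHashSketch
{
    // Assumed canonical form: trimmed and lower-cased with the invariant culture.
    public static string Sha1Hex(string email)
    {
        string canonical = email.Trim().ToLowerInvariant();
        using (SHA1 sha1 = SHA1.Create())
        {
            byte[] digest = sha1.ComputeHash(Encoding.UTF8.GetBytes(canonical));
            // BitConverter produces "A9-99-3E-..."; remove the dashes and lower-case it.
            return BitConverter.ToString(digest).Replace("-", "").ToLowerInvariant();
        }
    }
}
```

For example, `EmailHashSketch.Sha1Hex("abc")` returns the standard SHA-1 test vector a9993e364706816aba3e25717850c26c9cd0d89d, and `" ABC "` hashes to the same value because of the assumed normalization.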

Reviewing these methods is outside of the scope of this Visual How To. For more information about returning friends social network data, see Part 2: Getting Friends Information by Using the Proxy Library for Outlook Social Connector Provider Extensibility.

Overriding OSC Provider Proxy Library Activity Methods

A custom OSC provider that uses the OSC Provider Proxy Library must override the abstract and virtual methods for returning activity social network data. In the sample solution, the overrides for these OTProvider methods are located in the OTProvider_Activities source file.

There is only one method to override for activities:

- GetActivities—Returns activities for all users who are identified by the email addresses that are passed into the method.

Covering this method in detail is outside the scope of this Visual How To. For more information about returning activities social network data, see Part 3: Getting Activities Information by Using the Proxy Library for Outlook Social Connector Provider Extensibility.

Creating a custom Outlook Social Connector (OSC) provider for a social network is a straightforward process of implementing the OSC Provider extensibility interfaces to return social network data.

The OSC Provider Proxy Library simplifies this process by removing the requirement to implement each individual interface member. Instead, the OSC Provider Proxy Library defines a consolidated set of abstract and virtual methods to provide social network data. The developer of the OSC provider can focus on overriding these methods with the business logic required to interface with the social network API.

The sample solution for this article includes all of the code required for a custom OSC provider for OfficeTalk. This Visual How To does not cover all of that code; instead, it focuses on creating a custom OSC provider solution and on returning information about the OSC provider, the social network capabilities, and the current user. Figure 2 shows the social network data that the OfficeTalk provider returns.

Figure 2. OSC showing OfficeTalk social network data in the People Pane

For more information about returning friends social network data, see Part 2: Getting Friends Information by Using the Proxy Library for Outlook Social Connector Provider Extensibility.

For more information about returning activities social network data, see Part 3: Getting Activities Information by Using the Proxy Library for Outlook Social Connector Provider Extensibility.

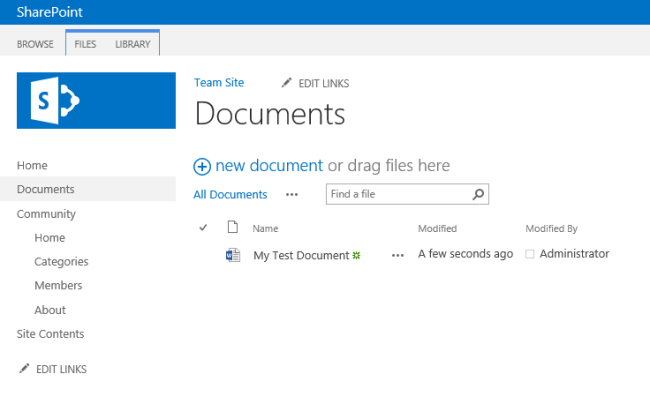

