Procedural Fairness

Even if there are valid substantive reasons for a dismissal, an employer must follow a fair procedure before dismissing the employee. Procedural fairness may in fact be regarded as the “rights” of the worker in respect of the actual procedure to be followed during the process of discipline or dismissal.

Procedural Fairness: Misconduct

The following requirements for procedural fairness should be met:

- An employer must inform the employee of allegations in a manner the employee can understand

- The employee should be allowed reasonable time to prepare a response to the allegations

- The employee must be given an opportunity to state his/her case during the proceedings

- An employee has the right to be assisted by a shop steward or other employee during the proceedings

- The employer must inform the employee of a decision regarding a disciplinary sanction, preferably in writing, in a manner that the employee can understand

- The employer must give clear reasons for dismissing the employee

- The employer must keep records of disciplinary actions taken against each employee, stating the nature of misconduct, disciplinary action taken and the reasons for the disciplinary action

Procedural and substantive fairness.

The areas of procedural and substantive fairness most often exist in the minds of employers, H.R. personnel and even disciplinary or appeal hearing Chairpersons as no more than a thick, swirling grey fog. This is not a criticism; it is a fact.

Whether or not a dismissal has been effected in accordance with a fair procedure and for a fair reason is very often not established with any degree of certainty beyond “I think so” or “it looks OK to me.” What must be realized is that the LRA recognizes only three grounds on which a dismissal may be considered fair: misconduct, incapacity (including poor performance) and operational requirements (retrenchments).

This does not mean, however, that a dismissal effected for misconduct, incapacity or operational requirements will automatically be considered fair by the CCMA should its fairness be disputed. Whichever of these grounds a dismissal is effected under, the Chairperson of the disciplinary hearing must, before imposing a sanction of dismissal, establish (satisfy himself in his own mind) that a fair procedure has been followed.

When the Chairperson has established that a fair procedure has been followed, he must then examine the evidence presented and decide, on a balance of probabilities, whether the accused is innocent or guilty.

If the accused is guilty, the Chairperson must then decide what sanction to impose. If the Chairperson decides to impose a sanction of dismissal, he must decide, after considering all the relevant factors, whether the dismissal is being imposed for a fair reason. The foregoing must be seen as three distinct steps that the Chairperson must follow, and he/she must not even consider the next step until the preceding step has been established or finalized.

The three distinct steps are:

1. Establish, by an examination of the entire process, from the original complaint to the adjournment of the Disciplinary Hearing, that a fair procedure has been followed by the employer and that the accused has not been compromised or prejudiced by any unfair actions on the part of the employer. Remember that at the CCMA, the employer must prove that a fair procedure was followed. The Chairperson must not even think about “guilty or not guilty” before it has been established that a fair procedure has been followed.

2. If a fair procedure has been followed, then the Chairperson can proceed to an examination of the minutes and the evidence presented to establish guilt or innocence.

3. If the verdict is guilty, the Chairperson must now decide on a sanction. Here, the Chairperson must consider several factors in addition to the evidence. He/she must consider the accused’s length of service, his previous disciplinary record, his personal circumstances, whether the sanction of dismissal would be consistent with previous similar cases, the circumstances surrounding the breach of the rule, and so on.

The Chairperson must consider all the mitigating circumstances, i.e. those circumstances in the employee’s favour. These may include the employee’s age, length of service, state of health, how close he is to retirement, his position in the company, his financial position, whether he shows any remorse and, if so, to what degree, his level of education, whether he is prepared to make restitution where this is possible, and whether he readily pleaded guilty and confessed.

The Chairperson must consider all the aggravating circumstances (those circumstances that count against the employee, such as the seriousness of the offence seen in the light of the employee’s length of service, his position in the company, the degree to which trust was an element of this employment relationship, etc.). The Chairperson must also consider all the extenuating circumstances (circumstances such as self-defence; provocation; coercion, for example whether he was “egged on” by others; lack of intent; necessity; etc.).

The Chairperson must allow the employee to plead in mitigation and must consider whether a lesser penalty would suffice. Only after careful consideration of all this can the Chairperson arrive at a decision of dismissal and be perfectly satisfied in his own mind that the dismissal is being effected for a fair reason. For all the above reasons, I submit that any Chairperson who adjourns a disciplinary hearing for anything less than 3 days has not done his job and has no right to act as Chairperson. A Chairperson who returns a verdict and sanction after adjourning for 10 minutes or 1 hour has quite obviously pre-judged the issue, or has been instructed by superiors to dismiss the hapless employee and acts accordingly.

Such behaviour by a Chairperson is an absolute disgrace, is totally unacceptable, and the Chairperson should be the one to be dismissed. The following is a brief summary of procedural and substantive fairness in cases of misconduct, incapacity and operational requirements dismissals. This is not intended to be exhaustive or complete – employers must still follow what is written in other modules.

The following procedural fairness checklist will apply to all disciplinary hearings, whether for misconduct, incapacity or operational requirements dismissals. Remember also that procedural fairness applies even if the sanction is only a written warning.

Procedural Quicklist (Have we followed a fair procedure?)

- Original complaint received in writing.

- Complaint fully investigated and all aspects of investigation recorded in writing.

- Written statements taken down from complainant and all witnesses.

- Accused advised in writing of date, time and venue of disciplinary hearing.

- Accused to have reasonable time in which to prepare his defense and appoint his representative.

- Accused advised in writing of the full nature and details of the charge/s against him.

- Accused advised in writing of his/her rights.

- Complainant provides copies of all written statements to accused.

- Chairperson appointed from outside the organization.

- Disciplinary hearing is held.

- Accused given the opportunity to plead to the charges.

- Complainant puts their case first, leading evidence and calling witnesses to testify.

- Accused is given the opportunity to cross-question witnesses.

- Accused leads evidence in his defense.

- Accused calls his witnesses to testify and complainant is given the opportunity to cross-question accused’s witnesses.

- Chairperson adjourns hearing for at least 3 days to have minutes typed up or transcribed.

- Accused is immediately handed a copy of the minutes.

- Chairperson considers whether a fair procedure has been followed.

- Chairperson decides on guilt or innocence based on the evidence presented by both sides and on a balance of probabilities: which story is more likely to be true, that of the complainant or that of the accused? That is the basis on which guilt or innocence is decided. In weighing up the balance of probabilities, the accused’s previous disciplinary record, personal circumstances, previous work record, mitigating circumstances, etc. are all EXCLUDED from the picture; these aspects are considered only when deciding on a suitable and fair sanction. Guilt or innocence is decided only on the basis of the evidence presented and on a balance of probabilities.

- Chairperson reconvenes the hearing.

- Chairperson advises the accused of the verdict. If not guilty, this is confirmed in writing to the accused and the matter is closed. If guilty, then:

- Chairperson asks accused if he/she has anything to add in mitigation of sentence.

- Chairperson adjourns the hearing again to consider any mitigating facts now added.

- Chairperson considers and decides on a fair sanction.

- Chairperson reconvenes hearing and delivers the sanction.

- Chairperson advises the accused of his/her rights to appeal and to refer the matter to the CCMA.

All communications to the accused, such as the verdict, the sanction, advice of his/her rights etc, must be reduced to writing.

Substantive Fairness – Misconduct (Is my reason good enough to justify dismissal?)

- Was a company rule, or policy, or behavioral standard broken?

- If so, was the employee aware of the transgressed rule, standard or policy, or could the employee reasonably be expected to have been aware of it? (You cannot discipline an employee for breaking a rule if he was never aware of the rule in the first place.)

- Has this rule been consistently applied by the employer?

- Is dismissal an appropriate sanction for this transgression?

- In other cases of transgression of the same rule, what sanction was applied?

- Take the accused’s personal circumstances into consideration.

- Consider also the circumstances surrounding the breach of the rule.

- Consider the nature of the job.

- Would the sanction now to be imposed be consistent with previous similar cases?

Substantive Fairness – Incapacity – Poor Work Performance.

Examples: incompetence (lack of skill or knowledge; insufficiently qualified or experienced); incompatibility (bad attitude; carelessness; does not “fit in”); inaccuracies (incomplete work; poor social skills; failure to comply with, or failure to reach, reasonable and attainable standards of quality and output).

Note: deliberate poor performance as a means of retaliation against the employer, for whatever reason, is misconduct and not poor performance.

- Was there a material breach of specified work standards?

- If so, was the accused aware of the required standard or could he reasonably be expected to have been aware of the standard?

- Was the breached standard a reasonable and attainable standard?

- Was the required standard legitimate and fair?

- Has the standard always been consistently applied?

- What is the degree of sub-standard performance? Minor? Major? Serious? Unacceptable?

- What damage, and what degree of damage (loss), has there been to the employer?

- What opportunity has been given to the employee to improve?

- What are the prospects of acceptable improvement in the future?

- Consider training, demotion or transfer before dismissing.

Incapacity – Poor Work Performance – additional notes on Procedural fairness.

If the employee is a probationer, ensure that sufficient instruction and counselling are given. If there is still no improvement, the probationer may be dismissed without a formal hearing. If the employee is not a probationer, ensure that appropriate instruction, guidance, training and counselling are given. This will include written warnings.

Make sure that a proper investigation is carried out to establish the reason for the poor work performance, and establish what steps the employer must take to enable the employee to reach the required standard. Formal disciplinary processes must be followed prior to dismissal.

Substantive Fairness – Incapacity – Ill Health.

- Establish whether the employee’s state of health allows him to perform the tasks that he was employed to carry out.

- Establish the extent to which he is able to carry out those tasks.

- Establish the extent to which these tasks may be modified or adapted to enable the employee to carry out the tasks and still achieve company standards of quality and quantity.

- Determine the availability of any suitable alternative work.

If nothing can be done in any of the above areas, dismissal on grounds of incapacity – ill health – would be justified.

Incapacity – Ill Health – additional notes on Procedural fairness.

- With the employee’s consent, conduct a full investigation into the nature and extent of the illness, injury or cause of incapacity.

- Establish the prognosis; this would entail discussions with the employee’s medical advisor.

- Investigate alternatives to dismissal, for example extended unpaid leave.

- Consider the nature of the job.

- Can the job be done by a temp until the employee’s health improves?

- Remember that the employee has the right to be heard and to be represented.

Operational Requirements – retrenchments.

All the steps of section 189 of the LRA must be followed. Quite obviously, the reason for the retrenchments must be based on the restructuring or resizing of a business, the closing of a business, cost reduction, economic reasons – to increase profit, reduce operating expenses, and so on, or technological reasons such as new machinery having replaced 3 employees and so on.

Re-designing of products, reduction of product range and redundancy will all be reasons for retrenchment. The employer, however, must at all times be ready to produce evidence to justify the reasons on which the dismissals are based.

The most important aspects of procedural fairness would be steps taken to avoid the retrenchments, steps taken to minimize or change the timing of the retrenchments, the establishing of valid reasons, giving prior and sufficient notice to affected employees, proper consultation and genuine consensus-seeking consultations with the affected employees and their representatives, discussion and agreement on selection criteria, offers of re-employment and discussions with individuals.

Substantive Fairness

Jan du Toit

In deciding whether to dismiss an employee, the employer must take Schedule 8 of the Labour Relations Act into consideration. Schedule 8 is a code of good practice on dismissal and serves as a guideline on when and how an employer may dismiss an employee. An oversimplified summary of Schedule 8 would be that the employer may dismiss an employee for a fair reason after following a fair procedure. Failure to do so may render the dismissal procedurally or substantively (or both) unfair and could result in reinstatement or compensation of up to 12 months of the employee’s salary.

Procedural fairness refers to the procedures followed in notifying the employee of the disciplinary hearing and the procedures followed at the hearing itself. Most employers do not have a problem in this regard, but they normally fail dismally when it comes to substantive fairness. The reason for this is that substantive fairness can be split into two elements, namely:

- establishing guilt; and

- deciding on an appropriate sanction.

This seems straightforward, but many employers justify a dismissal solely on the fact that the employee was found guilty of an act of misconduct. This is clearly contrary to the guidelines in Schedule 8 on “Dismissals for misconduct”:

“Generally, it is not appropriate to dismiss an employee for a first offence, except if the misconduct is serious and of such gravity that it makes a continued employment relationship intolerable. Examples of serious misconduct, subject to the rule that each case should be judged on its merits, are gross dishonesty or wilful damage to the property of the employer, wilful endangering of the safety of others, physical assault on the employer, a fellow employee, client or customer and gross insubordination. Whatever the merits of the case for dismissal might be, a dismissal will not be fair if it does not meet the requirements of section 188.

When deciding whether or not to impose the penalty of dismissal, the employer should in addition to the gravity of the misconduct consider factors such as the employee’s circumstances (including length of service, previous disciplinary record and personal circumstances), the nature of the job and the circumstances of the infringement itself.”

Schedule 8 further prescribes that:

Any person who is determining whether a dismissal for misconduct is unfair should consider-

(a) whether or not the employee contravened a rule or standard regulating conduct in, or of relevance to, the workplace; and

(b) if a rule or standard was contravened, whether or not-

(i) the rule was a valid or reasonable rule or standard;

(ii) the employee was aware, or could reasonably be expected to have been aware, of the rule or standard;

(iii) the rule or standard has been consistently applied by the employer; and

(iv) dismissal was an appropriate sanction for the contravention of the rule or standard.

From the above, it is clear that substantive fairness means that the employer must succeed in proving that the employee is guilty of an offence, that the seriousness of the offence outweighs the employee’s circumstances in mitigation, and that terminating the employment relationship was fair.

The disciplinary hearing therefore does not end with a verdict of guilty. In addition to proving guilt, the employer will have to raise circumstances in aggravation for the chairperson to consider a more severe sanction. The employee, on the other hand, must be given the opportunity to raise circumstances in mitigation for a less severe sanction.

Many employers make the mistake of relying on the fact that arbitration after a dismissal will be de novo, and focus their case at the CCMA solely on proving that the employee is guilty of misconduct, foolishly believing that the commissioner will agree that the dismissal was fair in the circumstances. The Labour Appeal Court in County Fair Foods (Pty) Ltd v CCMA & others (1999) 20 ILJ 1701 (LAC) at 1707 (paragraph 11) [also reported at [1999] JOL 5274 (LAC)], said that it was “not for the arbitrator to determine de novo what would be a fair sanction in the circumstances, but rather to determine whether the sanction imposed by the appellant (employer) was fair”. The court further explained:

“It remains part of our law that it lies in the first place within the province of the employer to set the standard of conduct to be observed by its employees and determine the sanction with which non-compliance with the standard will be visited, interference therewith is only justified in the case of unreasonableness and unfairness. However, the decision of the arbitrator as to the fairness or unfairness of the employer’s decision is not reached with reference to the evidential material that was before the employer at the time of its decision but on the basis of all the evidential material before the arbitrator. To that extent the proceedings are a hearing de novo.”

In NEHAWU obo Motsoagae / SARS (2010) 19 CCMA 7.1.6 the commissioner indicated that “the notion that it is not necessary for an employer to call as a witness the person who has taken the ultimate decision to dismiss or to lead evidence about the dismissal procedure, can therefore not be endorsed. The arbitrating commissioner clearly does not conduct a de novo hearing in the true sense of the word and he is enjoined to judge “whether what the employer did was fair.” The employer carries the onus of proving the fairness of a dismissal and it follows that it is for the employer to place evidence before the commissioner that will enable the latter to properly judge the fairness of his actions.”

In the case referred to above, Mr. Motsoagae, a Revenue Admin Officer for SARS, destroyed, without the necessary permission, confiscated cigarettes that were held in the warehouses of the State. Some of these cigarettes found their way onto the black market after he had allegedly destroyed them, and he was subsequently charged with theft. Interestingly, the commissioner agreed with the employer that the applicant, Mr. Motsoagae, was indeed guilty of the offence, but still found that the dismissal was substantively unfair. The commissioner justified his decision by referring to an earlier important Labour Court finding, re-emphasising the onus on the employer to prove that the trust relationship has been destroyed and that circumstances in aggravation, combined with the seriousness of the offence, outweighed the circumstances the employee may have raised in mitigation, thus justifying a sanction of dismissal.

“The respondent in casu did not bother to lead any evidence to show that dismissal had been the appropriate penalty in the circumstances and it is not known which “aggravating” or “mitigating” factors (if any) might have been taken into consideration. It is also not known whether any evidence had been led to the effect that the employment relationship between the parties had been irreparably damaged – the Labour Court in SARS v CCMA & others (2010) 31 ILJ 1238 (LC) at 1248 (paragraph 56) [also reported at [2010] 3 BLLR 332 (LC)], held that a case for irretrievable breakdown should, in fact, have been made out at the disciplinary hearing.

The respondent’s failure to lead evidence about the reason why the sanction of dismissal was imposed leaves me with no option but to find that the respondent has not discharged the onus of proving that dismissal had been the appropriate penalty and that the applicant’s dismissal had consequently been substantively unfair.

The respondent at this arbitration, in any event, also led no evidence to the effect that the employment relationship had been damaged beyond repair. The Supreme Court of Appeal in Edcon Ltd v Pillemer NO & others (2009) 30 ILJ 2642 (SCA) at 2652 (paragraph 23) [also reported at [2010] 1 BLLR 1 (SCA) – Ed], held as follows:

“In my view, Pillemer’s finding that Edcon had led no evidence showing the alleged breakdown in the trust relationship is beyond reproach. In the absence of evidence showing the damage Edcon asserts in its trust relationship with Reddy, the decision to dismiss her was correctly found to be unfair.”

Employers are advised to consider circumstances in aggravation and mitigation before deciding to recommend dismissal as an appropriate sanction. In addition, the employer will have to prove that the trust relationship that existed between the parties has deteriorated beyond repair or that the employee made continued employment intolerable. Employers should also remember that there are three areas of fairness to prove to the arbitrating commissioner: procedural fairness; substantive fairness (guilt); and substantive fairness (appropriateness of the sanction).

Employers are advised to make use of the services of experts in this area in order to ensure both substantive and procedural fairness.

Contact Jan Du Toit – jand@labourguide.co.za

![sap_integration_en_round[1]](https://sharepointsamurai.files.wordpress.com/2014/09/sap_integration_en_round1.jpg?w=300&h=155)

Note

Note![sap_integration_en_round[2]](https://sharepointsamurai.files.wordpress.com/2014/09/sap_integration_en_round2.jpg?w=300&h=155)

Important

Important

![DevOps-Cloud[1]](https://sharepointsamurai.files.wordpress.com/2014/09/devops-cloud1.jpg?w=300&h=188)

![4503.DevOps_2D00_Barriers_5F00_1C41B571[1]](https://sharepointsamurai.files.wordpress.com/2014/09/4503-devops_2d00_barriers_5f00_1c41b5711.png?w=502&h=278)

![devops[1]](https://sharepointsamurai.files.wordpress.com/2014/09/devops1.png?w=300&h=241)

![DevOps-Lifecycle[1]](https://sharepointsamurai.files.wordpress.com/2014/09/devops-lifecycle1.png?w=536&h=193)

![kaizen%20not%20kaizan[1]](https://sharepointsamurai.files.wordpress.com/2014/09/kaizen20not20kaizan1.png?w=300&h=289)

![IC630180[1]](https://sharepointsamurai.files.wordpress.com/2014/09/ic6301801.gif?w=300&h=90)

![Site-Definition-03.png_2D00_700x0[1]](https://sharepointsamurai.files.wordpress.com/2014/08/site-definition-03_2d00_700x01.png?w=474)

![0880.homepage_2D00_graphics_5F00_7B9FA8B1[1]](https://sharepointsamurai.files.wordpress.com/2014/07/0880-homepage_2d00_graphics_5f00_7b9fa8b11.png?w=474&h=489)

+

+ ![microsoft_crm_wallpaper_02[1]](https://sharepointsamurai.files.wordpress.com/2014/06/microsoft_crm_wallpaper_021.jpg?w=167&h=125)

![Konsort_DocManagement2_Large[1]](https://sharepointsamurai.files.wordpress.com/2014/05/konsort_docmanagement2_large1.jpg?w=474&h=285)

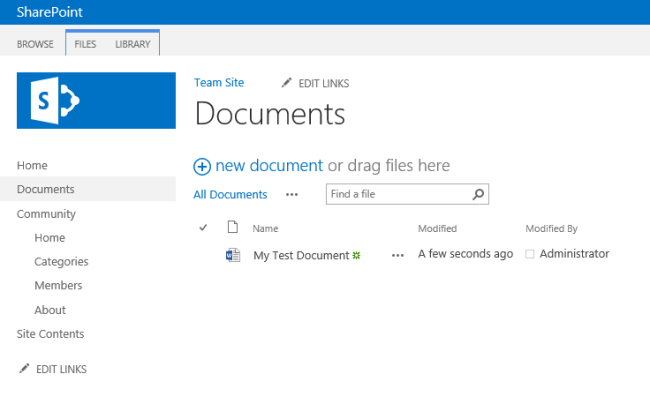

One of the core concepts of Business Connectivity Services (BCS) for

One of the core concepts of Business Connectivity Services (BCS) for

![sap2[1]](https://sharepointsamurai.files.wordpress.com/2014/05/sap21.jpg?w=300&h=148)